The Ethics of AI: Where Do We Draw the Line?

Key Takeaways

AI ethics is no longer optional—it’s a business imperative that builds trust, drives compliance, and fuels sustainable growth. These key points help startups and SMBs embed ethical AI quickly and effectively.

- Consider the impact of AI on society by recognizing how societal biases can influence AI systems and ensuring ethical practices benefit all.

- Embed fairness, transparency, and accountability from day one, emphasizing responsible use of AI to turn ethics into a competitive edge that wins user trust and regulatory approval.

- Keep human oversight central by integrating “human-in-the-loop” controls, especially for high-risk or sensitive AI decisions.

- Adopt bias testing and continuous auditing tools early to identify and fix skewed outputs before they harm users or invite legal risk.

- Build lightweight governance programs with cross-functional ethics teams to operationalize compliance without slowing innovation.

- Prioritize transparency with explainability tools and user notifications to make AI decisions clear, boosting user confidence and dispute resolution.

- Address privacy concerns by safeguarding data collection and user consent to ensure privacy and data protection are central to your AI strategy.

- Balance AI innovation with environmental responsibility by choosing energy-efficient algorithms and eco-conscious cloud providers to cut carbon footprints.

- Stay proactive on evolving regulations like the EU AI Act by maintaining open communication and documentation to avoid surprises and fines.

- Cultivate a culture of curiosity and accountability where ethics is a shared priority that drives continuous learning and practical action.

Start applying these insights now to build AI products that don’t just move fast—they move right. Dive into the full article to explore strategic frameworks and examples that make ethical AI approachable for your team.

Introduction

What happens when the technology designed to make our lives easier starts making decisions on its own, sometimes in ways we don’t fully understand? With AI advancing faster than regulations can keep up, what was once the domain of science fiction is now a reality with significant consequences. Figuring out where to draw clear ethical boundaries in AI development and use isn’t just philosophical; it’s critical for startups and SMBs that want to build trust and avoid costly missteps.

You don’t have to be a compliance expert or a tech giant to navigate AI ethics effectively. This guide breaks down practical strategies to embed fairness, transparency, and accountability into your AI projects without killing your momentum, while considering AI's impact on both business and society.

Here’s what you’ll get:

- A clear look at evolving ethical principles turned operational must-haves

- Insights into the regulatory landscape shaping AI development worldwide

- Tactics to handle complex challenges like bias, privacy, and human oversight

- Ways to transform ethics compliance into a competitive advantage for growth

As AI becomes a core part of your product’s DNA, understanding these fundamentals not only protects your business but also builds the kind of user trust that powers lasting success.

The next section delves into the foundational principles reshaping AI ethics in 2025 and why moving from broad ideas to hands-on application is essential for every team pushing AI forward.

Understanding the Foundations of AI Ethics in 2025

AI ethics is all about making sure artificial intelligence acts responsibly and fairly (Ethics of artificial intelligence). As AI evolves rapidly—think systems that plan and learn on their own—ethical considerations have shifted from nice-to-have guidelines to urgent, operational must-haves. Developers play a crucial role in ensuring that AI systems are designed and deployed with fairness, transparency, and accountability in mind. Ethical responsibility is now recognized as a core value in AI development, guiding decisions to address issues like bias, privacy, and ownership. These ethical principles matter because the impact of AI extends beyond individuals to society as a whole, influencing fairness, trust, and social outcomes.

The Shift From Principles to Practice

What started as broad ethical principles is now tied to everyday AI development, especially with regulations like the European Union’s AI Act enforcing transparency, fairness, and human oversight.

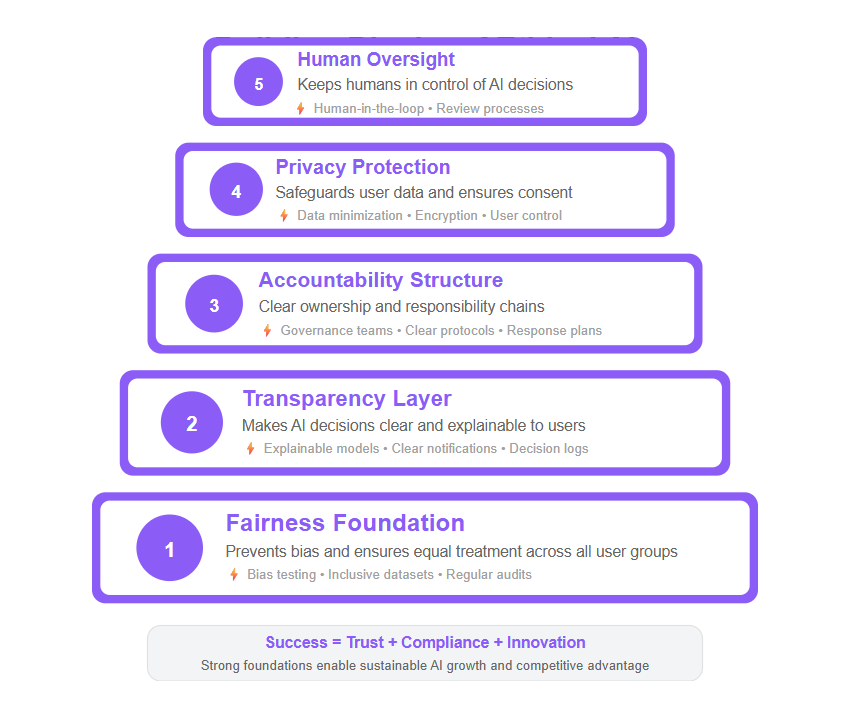

Core ethical principles you need to keep front and center include:

- Fairness: Preventing AI from reinforcing biases or discrimination; this includes conducting bias auditing using tools like IBM AI Fairness 360 or Google's What-If Tool to ensure models are evaluated for fairness before deployment.

- Transparency: Making AI decisions explainable to users and regulators

- Accountability: Knowing who’s responsible when AI goes wrong

- Privacy: Protecting user data in compliance with laws and ethics

- Human Oversight: Keeping people “in the loop” to guide or override AI decisions

Operationalizing these principles means recognizing the responsibilities of marketing agencies, firms, and partners to manage the ethical impact of AI tools, especially regarding automation, personalization, and consumer trust.

These don’t just sound good—they help companies build trust with users, partners, and regulators alike. Picture this: your AI-powered product delivers personalized recommendations, but if the process feels like a black box, users might drop off fast. Ethics make that process clear and fair.

Why Ethics Matter More Than Ever

Ethical AI isn’t just about avoiding fines or PR crises. It’s about creating products that users feel confident using day-to-day. When AI respects privacy, manages bias, and provides clear explanations, it drives better engagement and long-term growth. The influence of AI on user decisions is significant, making it essential to prioritize ethical considerations to maintain trust.

Think of ethics like a GPS system for AI innovation—guiding you away from costly detours like legal troubles or user backlash. Organizations embedding ethics early cut risk and boost their reputation, positively impacting public opinion.

Quick Takeaways:

- Embed fairness, transparency, and accountability into your AI design from day one.

- Keep human judgment part of AI decisions, especially in sensitive applications.

- Transparency isn’t optional—it’s the key to earning and maintaining user trust.

This approach turns ethical AI from a headache into a competitive edge, differentiating your startup or SMB in the crowded 2025 landscape.

By operationalizing these ethical foundations, you ensure your AI products don’t just work fast—they work right.

Regulatory Landscape Shaping Ethical AI

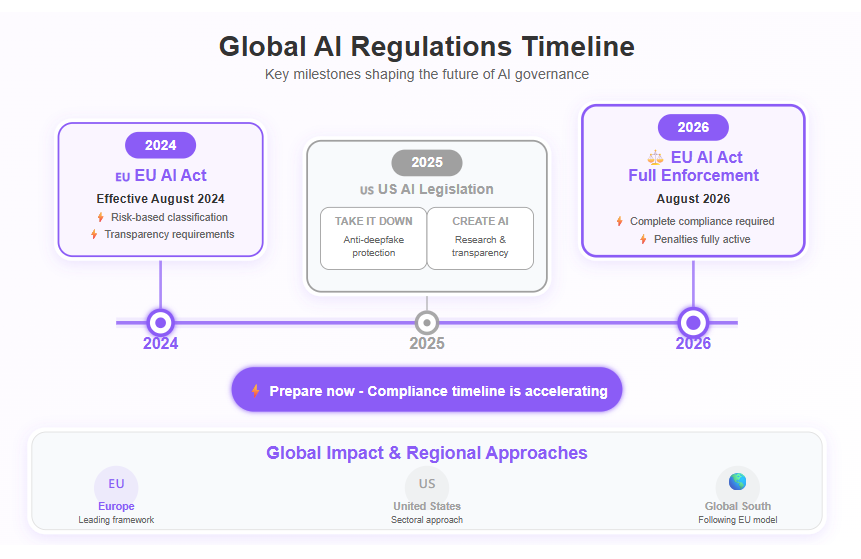

Major Global Regulatory Frameworks

The European Union’s AI Act, in force since August 2024, categorizes AI systems by risk level and enforces strict compliance for high-risk uses like healthcare and law enforcement. Marketing agencies, along with other organizations, are among the stakeholders affected by these regulations.

Key features include:

- A risk-based approach that tailors requirements to AI impact

- Transparency mandates with human oversight and data governance rules, including the protection of sensitive data through access controls and content tracking

- A phased rollout, with most obligations applying from August 2026, pushing organizations to prepare now

In the United States, notable laws advancing in 2025 are:

- The TAKE IT DOWN Act, targeting the distribution of nonconsensual intimate imagery, including AI-generated deepfakes

- The CREATE AI Act, aiming to expand AI research resources for public use and improve transparency

Across LATAM and other regions, governments are increasingly adopting regulatory frameworks focused on integrating ethical AI into enterprise deployments, often mirroring the EU’s risk-based principles.

Think of these laws as guardrails steering AI toward safer, fairer deployments worldwide.

Regulatory Impact on AI Development and Use

Regulations today enforce critical ethical pillars like:

- Fairness through bias mitigation requirements

- Mandatory transparency, including explainability and user notifications

- Compulsory human oversight for high-risk AI applications

These regulations increasingly impact AI-driven content creation, requiring organizations to ensure ethical standards and transparency in automated content production.

Auditing AI for compliance is a growing challenge since many models are complex and opaque. Current solutions involve:

- Developing technical frameworks for bias testing and algorithm explainability

- Utilizing AI tools for compliance monitoring and auditing processes

- Establishing cross-industry audit standards and responsible AI teams

Governments and industries are increasingly collaborating to share best practices and close enforcement gaps. This partnership helps keep AI development aligned with evolving societal values without stifling innovation.

Picture organizations setting up live audit dashboards that continuously flag ethical risks during product development—that’s where this trend is headed.

The Transformative Role of AI Ethics Regulations in 2025

New laws are reshaping AI governance by:

- Requiring companies to embed ethics into every project phase, from design to deployment, and to promote the ethical use of AI technologies

- Forcing a balanced approach that stimulates innovation while ensuring compliance

- Motivating firms to proactively build governance frameworks and ethics training programs

Forward-thinking startups and SMBs can turn ethics compliance into a business advantage by:

- Leveraging the promise of ethical AI compliance to boost user trust and brand reputation

- Reducing legal and operational risks before they hit the market

- Attracting partners and investors focused on responsible tech

For example, a mid-size LATAM firm recently accelerated approval timelines by integrating EU AI Act compliance early—a smart move that saved months of rework down the line.

Ethical AI regulations in 2025 aren’t just legal hurdles—they’re strategic game plans for sustainable growth.

Embedding these emerging regulatory demands into your dev process means fewer surprises and more control over your AI’s impact.

Starting today, think of ethics and compliance as vital business gears—not optional extras—to power your AI projects forward safely and confidently.

Embedding Ethical Principles into AI Operations

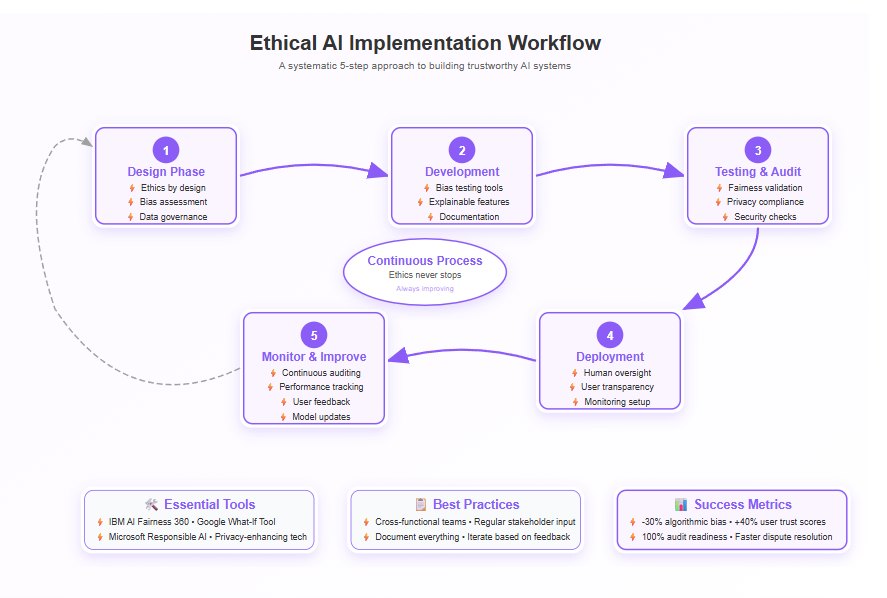

From Principles to Practice: Operationalizing AI Ethics

Ethics isn’t just a buzzword anymore—it’s a business imperative. Organizations are moving from vague guidelines to concrete AI governance structures that embed ethics into day-to-day workflows. Digital literacy is essential for teams to ensure ethical AI operations, as it equips professionals with the skills to critically assess and responsibly use AI tools.

This means building:

- Dedicated AI ethics teams responsible for oversight

- Clear governance programs tracking ethical compliance

- Ethics checkpoints integrated into every stage of AI development—from design to deployment

Picture this: before releasing a new model, your team runs it through a bias and transparency review workflow, ensuring it meets both legal standards and your company’s values.

5 Strategic Approaches to Embedding Ethics in AI Development

To keep ethics practical without slowing innovation, teams can adopt these five key strategies:

- Multidisciplinary collaboration: Involve ethicists, engineers, and domain experts early

- Transparent design: Make AI systems explainable and user-friendly

- Bias mitigation: Use testing tools to spot and fix skewed outputs

- Continuous auditing: Regularly check AI behavior over time

- Stakeholder engagement: Get feedback from end-users and impacted groups, and build a deep understanding of your audience so you can address their needs

For startups and SMBs, starting lean is okay—focus on bias testing and clear documentation first, then scale up ethics processes alongside your product roadmap.

Why Human Oversight Is Essential in AI Decision-Making

Despite strides in autonomy, human judgment remains critical—especially when AI impacts health, finance, or legal decisions.

Effective human-AI partnerships:

- Prevent unintended harms by catching edge cases AI misses

- Increase accountability by letting humans review flagged AI actions

- Allow for stronger “human-in-the-loop” approaches rather than fully autonomous systems

AI can efficiently handle repetitive tasks, freeing up human experts to focus on oversight and complex decision-making.

Think of it as driving a car with cruise control: AI handles the routine while humans keep hands on the wheel ready to react.
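To make the "hands on the wheel" idea concrete, here is a minimal sketch of a human-in-the-loop routing rule. Everything in it is an assumption for illustration: the `Prediction` fields, the `HIGH_RISK_DOMAINS` set, and the 0.9 confidence threshold are hypothetical and would need tuning for a real product.

```python
from dataclasses import dataclass

# Hypothetical domains treated as high-risk; adjust to your own product.
HIGH_RISK_DOMAINS = {"health", "finance", "legal"}


@dataclass
class Prediction:
    domain: str        # area the decision affects, e.g. "finance"
    label: str         # the model's proposed decision
    confidence: float  # model confidence between 0.0 and 1.0


def route(prediction: Prediction, min_confidence: float = 0.9) -> str:
    """Return "human_review" or "auto_approve" for a model prediction."""
    # Sensitive domains always get a human reviewer, whatever the confidence.
    if prediction.domain in HIGH_RISK_DOMAINS:
        return "human_review"
    # Low-confidence predictions are escalated instead of applied automatically.
    if prediction.confidence < min_confidence:
        return "human_review"
    return "auto_approve"


print(route(Prediction("finance", "deny_loan", 0.99)))     # human_review
print(route(Prediction("marketing", "send_offer", 0.95)))  # auto_approve
print(route(Prediction("marketing", "send_offer", 0.60)))  # human_review
```

The design choice here is that risk level outranks confidence: a very confident model still cannot act alone on a sensitive decision.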

Embedding ethics is no longer optional—it’s how you build trust and keep AI aligned with human values. Starting with basic governance and human oversight can protect your business today while preparing you for evolving regulations that demand transparency and fairness.

Tackling Key Ethical Challenges in AI Deployment

Addressing Bias, Transparency, and Fairness

AI bias often hides in the data on which systems are trained, or in design choices, risking systemic inequalities when deployed. These biases often reflect those present in society, meaning that societal prejudices can be embedded in AI outcomes.

Key steps to spot and fix bias include:

- Using automated bias detection tools to flag disparities in outputs

- Applying frameworks like fairness-aware machine learning to adjust algorithm decisions

- Engaging diverse teams to assess impact from multiple perspectives
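As one possible starting point for the first step above, the sketch below compares approval rates across groups and computes a disparate impact ratio. The sample data is made up, and the 0.8 cutoff is the common "four-fifths" rule of thumb rather than a legal test; dedicated toolkits offer far more metrics than this.

```python
from collections import defaultdict


def selection_rates(records):
    """Compute the share of positive outcomes per group.

    records: iterable of (group, outcome) pairs, where outcome is 1 or 0.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Ratio of the lowest group selection rate to the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group was favored
    return min(rates.values()) / highest


# Made-up example data: (group, 1 = approved, 0 = rejected)
sample = [("A", 1)] * 8 + [("A", 0)] * 2 + [("B", 1)] * 5 + [("B", 0)] * 5
ratio = disparate_impact_ratio(sample)
print(selection_rates(sample))  # {'A': 0.8, 'B': 0.5}
print(round(ratio, 3))          # 0.625
print("flag for review" if ratio < 0.8 else "within threshold")
```

A low ratio does not prove discrimination on its own; it is a signal that a human should look at the data and the model more closely.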

Transparent AI isn’t just nice to have — it’s critical for building user trust and making systems accountable. When users can see how decisions are made, they’re less likely to feel misled or marginalized.

Unlocking Fairness: Tackling AI Bias Head-On

Creating fairness-aware models means going beyond “neutral” and actively respecting diversity.

Proven strategies involve:

- Regular bias audits throughout the AI lifecycle

- Designing for inclusion with representative datasets

- Incorporating feedback loops from impacted communities

Look at recent cases: some companies cut algorithmic bias in hiring by 30% after overhauling hiring data.

But remember, biased AI outcomes can perpetuate harms like reinforcing stereotypes or denying opportunities, so staying vigilant is non-negotiable. These impacts extend beyond individuals and organizations, affecting the world at large by shaping societal norms and influencing global perceptions of fairness.

How Transparent AI Systems Unlock Ethical Accountability

Transparency tech has evolved fast — now we have:

- Explainability tools that translate complex models into human-friendly reasons

- Techniques for model interpretability helping engineers spot errors early

- User notifications informing when AI influences user decisions and outcomes

These features don’t just satisfy regulators like the EU AI Act; they empower users and speed up dispute resolution when issues arise.

That said, achieving transparency can be tricky because some AI models (like deep neural nets) are inherently complex — so combining tools with clear communication is key for building trust with audiences.
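For simple models, an explanation can be as plain as showing how much each input pushed the score up or down. The sketch below does this for a linear scoring model; the feature names and weights are hypothetical, and complex models such as deep neural nets need dedicated explainability tooling instead of this direct breakdown.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Break a linear model's score into per-feature contributions."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Sort so the most influential features (by absolute size) come first.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical credit-style example; weights and inputs are invented.
weights = {"income": 0.5, "late_payments": -2.0, "account_age_years": 0.25}
applicant = {"income": 4.0, "late_payments": 2.0, "account_age_years": 2.0}

score, ranked = explain_linear_score(weights, applicant)
print(f"score: {score:+.2f}")  # score: -1.50
for name, value in ranked:
    print(f"{name}: {value:+.2f}")
# late_payments: -4.00
# income: +2.00
# account_age_years: +0.50
```

A ranked list like this can feed a user-facing message such as "late payments lowered your score the most", which is the kind of human-friendly reason the tools above aim to produce.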

Mastering AI Privacy: Balancing Innovation and User Rights

AI thrives on data, but privacy must stay front and center. Privacy concerns arise as AI systems analyze large volumes of personal data, raising issues related to data collection without user consent, surveillance, and the ethical implications of balancing convenience with privacy rights.

Best practices include:

- Using privacy-enhancing technologies (PETs) like differential privacy and federated learning

- Enforcing strict data governance with clear consent and purpose limitations, and ensuring the protection of sensitive data through access controls and content tracking

- Implementing data minimization and anonymization to limit exposure

This balance avoids costly privacy breaches—which can hit SMBs with multimillion-dollar fines—while keeping innovation moving.
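To give a feel for how differential privacy works, here is a minimal sketch of the Laplace mechanism applied to a simple count. The epsilon value and the count are illustrative assumptions, and a production system should rely on a vetted privacy library rather than hand-rolled noise like this.

```python
import random


def private_count(true_count, epsilon, sensitivity=1.0, rng=None):
    """Return a count with Laplace noise added (the Laplace mechanism).

    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    scale = sensitivity / epsilon
    # The difference of two exponential draws with mean `scale`
    # follows a Laplace distribution centered at zero.
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return true_count + noise


rng = random.Random(42)  # fixed seed only so the demo is repeatable
print(private_count(1200, epsilon=0.5, rng=rng))
```

The published number stays close to the real count on average, but no single user's presence or absence can be confidently inferred from it.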

7 Critical Ethical Challenges Facing AI in 2025

The AI field faces intertwined hurdles. Under the broad umbrella of ethical concerns (bias, privacy, misinformation, deepfakes, ownership rights, and the need for responsible development and regulation), seven stand out:

- Bias

- Privacy

- Accountability

- Environmental impact (AI’s growing carbon footprint)

- Misuse and malicious applications (including risks from AI-generated content such as realistic fake media, misinformation, and privacy violations)

- Transparency

- Autonomy and control

Addressing one without the others falls short; integrated solutions and ongoing industry initiatives aim to build ethical AI ecosystems proactively.

AI ethics isn’t theoretical — it’s about shaping tools that are fair, clear, and respectful of people. Tackling bias and privacy with transparent strategies turns ethics into a competitive advantage that fuels trust and long-term success.

Emerging Ethical Considerations for Advanced AI Systems

The Rise of Agentic AI and Ethical Implications

Agentic AI refers to autonomous systems that plan and execute complex tasks without human intervention. Think of them as digital agents making decisions on their own, from managing logistics to driving vehicles.

This autonomy introduces unique governance challenges, such as:

- Accountability: Who’s responsible if an agentic AI causes harm?

- Predictability: How do we ensure consistent, safe behavior?

- Alignment with human values: How can we trust that these systems respect our ethical standards? Ensuring a strong sense of ethical appropriateness is crucial, so that agentic AI aligns with social and moral norms.

To keep agentic AI in check, organizations adopt strategies like:

- Implementing strict ethical guardrails embedded in AI code

- Using continuous human-in-the-loop monitoring despite autonomy

- Developing frameworks for transparent decision logs so actions can be audited later

- Drawing clear boundaries for agentic AI to define acceptable behaviors and prevent misuse
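The guardrail and decision-log ideas above can be sketched in a few lines. This is only an illustration under stated assumptions: the `ALLOWED_ACTIONS` allow-list, the `DecisionLog` class, and the `attempt` helper are hypothetical names, and a real system would persist the log somewhere tamper-resistant.

```python
import json
import time

# Hypothetical allow-list of actions this agent may take on its own.
ALLOWED_ACTIONS = {"reroute", "notify_customer", "pause_delivery"}


class DecisionLog:
    """Append-only, in-memory record of what the agent tried to do."""

    def __init__(self):
        self._entries = []

    def record(self, action, reason, allowed):
        entry = {
            "timestamp": time.time(),
            "action": action,
            "reason": reason,
            "allowed": allowed,
        }
        self._entries.append(entry)
        return entry

    def export(self):
        """Return the log as JSON lines for later audit."""
        return "\n".join(json.dumps(entry) for entry in self._entries)


def attempt(action, reason, log):
    """Check an action against the guardrail and log the outcome either way."""
    allowed = action in ALLOWED_ACTIONS
    log.record(action, reason, allowed)
    return allowed


log = DecisionLog()
print(attempt("reroute", "storm on planned path", log))   # True
print(attempt("exceed_speed_limit", "running late", log))  # False
print(log.export())
```

Logging blocked attempts as well as permitted ones matters: the refusals are often the most useful entries when auditors ask what the agent wanted to do.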

Picture an AI delivery drone autonomously rerouting due to weather — should it prioritize speed or safety? Embedding clear ethical priorities upfront helps answer this.

Environmental Impact of AI Technologies

AI’s soaring performance comes with a growing carbon footprint. Training large models can consume megawatt-hours of energy, comparable to what entire neighborhoods use in a year. Concerns about AI’s environmental impact are real and increasingly urgent.

Industry initiatives aim to curb this through:

- Designing energy-efficient algorithms that reduce computation needs

- Using sustainable data center practices, like renewable energy and advanced cooling

- Publishing carbon impact reports to foster transparency and improvement

Ethical AI development now means balancing innovation with environmental responsibility. For startups, choosing cloud providers with green certifications offers an immediate way to reduce impact.

Several leading companies have committed to reducing AI energy use by 30% over the next two years, a sign that sustainable AI is more than just a buzzword.
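A rough back-of-the-envelope estimate can show why the choice of provider matters. The sketch below multiplies hardware power, run time, data center overhead (PUE), and grid carbon intensity; every number in the example is an assumption chosen for illustration, not a measurement.

```python
def training_energy_kwh(gpu_count, gpu_power_watts, hours, pue):
    """Estimate total energy for a training run, including data center overhead."""
    return gpu_count * (gpu_power_watts / 1000) * hours * pue


def training_emissions_kg(energy_kwh, grid_kg_co2_per_kwh):
    """Convert energy use into an estimate of CO2-equivalent emissions in kg."""
    return energy_kwh * grid_kg_co2_per_kwh


# Assumed run: 8 GPUs drawing 300 W each for 100 hours, PUE of 1.5.
energy = training_energy_kwh(gpu_count=8, gpu_power_watts=300, hours=100, pue=1.5)
print(round(energy, 1))                               # 360.0
# Same run on two hypothetical grids with different carbon intensity.
print(round(training_emissions_kg(energy, 0.40), 1))  # 144.0
print(round(training_emissions_kg(energy, 0.05), 1))  # 18.0
```

In this toy comparison the identical workload emits eight times less on the cleaner grid, which is why provider and region selection is such a quick win.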

Key Takeaways

- Agentic AI needs built-in ethical constraints and human oversight for safe autonomy.

- The environmental cost of AI demands energy-efficient designs and sustainable infrastructure.

- Startups and SMBs can immediately reduce AI’s carbon footprint by selecting eco-conscious partners and embracing the promise of ethical AI.

Emerging AI systems aren’t just about what they can do — they’re defined by how thoughtfully we make them act and the planet they leave behind.

Navigating Enforcement and Compliance Challenges

Regulators face major hurdles auditing AI algorithms due to their opacity and rapid evolution. Unlike traditional software, many AI models—especially deep learning systems—function as “black boxes,” making it tough to trace how outcomes are generated. This challenge is particularly evident in areas like facial recognition systems, where regulatory focus is increasing due to concerns about accuracy, bias, and privacy.

Additionally, the spread of fake news generated by AI presents a significant compliance issue, as these technologies can produce convincing false content that undermines public trust and complicates enforcement efforts.

Regulatory Complexity Meets Technology Velocity

This challenge is compounded by how fast AI tech changes. Rules set today can quickly feel outdated tomorrow, creating a moving target for enforcement. Additionally, there is a risk of over-reliance on AI systems, which can lead to unforeseen vulnerabilities if organizations depend too heavily on automated processes without sufficient human oversight.

To address this, organizations are wisely building internal compliance and auditing programs that:

- Continuously monitor AI outputs for fairness and errors

- Maintain detailed documentation on data sources and model decisions

- Establish clear governance protocols and ethical review committees

These steps don’t just prepare companies for regulatory scrutiny—they also help catch risks early before issues escalate.

Transparency and Education: The Unsung Heroes

Effective enforcement relies heavily on transparency. Companies that proactively share AI design choices and testing results with regulators, backed by clear documentation, build stronger trust.

At the same time, ongoing education programs for both compliance teams and regulators help bridge expertise gaps. Regulators often lack deep technical knowledge, so open dialogue and continuous learning are essential for meaningful oversight.

Balancing Punishment and Partnership

Striking the right tone between strict enforcement and collaborative improvement defines the future of AI ethics governance.

- Punitive actions deter reckless practices and uphold public trust

- Collaborative approaches encourage innovation and shared accountability

However, as AI technologies can be used to manipulate public opinion—through deepfakes, misinformation, and other means—there is a growing need for oversight to address these risks and ensure ethical use.

One promising example: some EU member states are piloting joint industry-government task forces to review AI compliance with the EU AI Act, focusing on coaching rather than just fining.

Key Takeaways for SMBs and Startups

- Build robust internal auditing mechanisms as a default, not an afterthought

- Commit to transparent communication with regulators early and often

- Invest in cross-functional training to stay ahead of evolving compliance needs

- Consider the ethical implications of AI-powered content generation in your marketing and public relations strategies

Picture this: a startup team conducting weekly ethical audits and sharing progress reports with regulators—turning what feels like a burden into a trust-building opportunity.

Enforcement isn’t just about penalties; it’s the foundation for trustworthy AI that users and partners can rely on. Staying proactive and engaged in compliance conversations helps you avoid surprises and lead innovation rather than lag behind it.

Preparing for the Future: Strategic Ethical AI Adoption for SMBs and Startups

Integrating AI ethics early doesn’t have to slow you down. Choosing the right words when communicating about AI ethics is crucial, as language shapes understanding and trust. Startups and SMBs can embed responsible AI practices while maintaining speed by focusing on clear, actionable steps from day one.

Move Fast, Stay Fair

To get ahead without sacrificing ethics, prioritize:

- Bias detection and mitigation tools, including predictive analytics, that fit your tech stack

- Embedding transparency checkpoints in your development cycle

- Setting up lightweight but effective governance programs with cross-functional teams

Picture this: your dev sprint includes a quick bias audit, not as a hurdle, but as a smart risk reducer that prevents costly rework or public blowback.

Ethics as Your Competitive Edge

Ethical AI practices build more than compliance—they create trust. Customers want to know you’re accountable and transparent. The use of AI tools that support ethical practices, such as bias mitigation, transparent auditing, and responsible deployment, further reinforces your commitment to trustworthy AI. This can translate to:

- Higher customer retention and loyalty

- Easier navigation of evolving regulations (such as the EU AI Act rolling out through 2026)

- Stronger partnerships with investors and collaborators who value responsible innovation

Imagine a pitch meeting where your commitment to ethical AI wins over cautious decision-makers before you even demo your product.

Culture: Curiosity Meets Accountability

Ethics thrives when your team embraces:

- Continuous learning about emerging AI risks

- Open conversations about mistakes, lessons, and the importance of inclusive language

- Shared ownership of outcomes across roles

This is where your core values come to life—where curiosity and action replace “we’ve always done it this way.”

Practical Next Steps

To start, SMBs and startups should:

- Conduct a quick ethical risk assessment on new AI projects, using an AI tool where possible

- Invest in bias testing and explainability tools early

- Designate ethics champions who drive awareness and accountability

- Stay current on regulatory updates impacting your market
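A quick ethical risk assessment can start as a short scored checklist. The sketch below is one hypothetical way to do it: the questions, weights, and thresholds are invented for illustration and do not correspond to any regulation's official risk categories.

```python
# Hypothetical screening questions with illustrative weights.
QUESTIONS = {
    "affects_health_finance_or_legal": 3,
    "uses_personal_data": 2,
    "no_human_review": 2,
    "decisions_not_explainable": 1,
    "no_bias_testing_done": 1,
}


def assess(answers):
    """Score a project from yes/no answers and suggest a review level."""
    unknown = set(answers) - set(QUESTIONS)
    if unknown:
        raise KeyError(f"unknown questions: {sorted(unknown)}")
    # Add up the weight of every question answered "yes" (True).
    score = sum(weight for name, weight in QUESTIONS.items() if answers.get(name))
    if score >= 5:
        level = "full ethics review"
    elif score >= 2:
        level = "lightweight review"
    else:
        level = "standard checks"
    return score, level


print(assess({"uses_personal_data": True, "no_bias_testing_done": True}))
# (3, 'lightweight review')
print(assess({"affects_health_finance_or_legal": True, "no_human_review": True}))
# (5, 'full ethics review')
```

A checklist like this will not catch everything, but it gives a small team a repeatable way to decide which projects deserve a deeper look.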

Diving into these steps now ensures you won’t scramble when regulations tighten or your AI models scale.

Exploring detailed guides and tools on our sub-pages can help you embed these practices without losing velocity.

Embedding AI ethics isn’t just about avoiding pitfalls—it’s about turning responsibility into a real business advantage, fueling trust, innovation, and sustainable growth.

Quotable Snippets:

- “Fast development and ethical AI aren’t enemies—you can have both with the right focus.”

- “Ethics is your startup’s secret sauce for trust, partnerships, and staying compliant.”

- “When curiosity meets accountability, AI ethics becomes part of your culture, not just a checkbox.”

Conclusion

Ethical AI isn’t just a compliance checkbox—it’s a powerful catalyst for trust, innovation, and business resilience in 2025’s fast-moving landscape. By embedding fairness, transparency, and human oversight into your AI projects, you position your startup or SMB not only to navigate complex regulations but to build lasting relationships with users and partners. Ethical responsibility should guide every stage of AI development, ensuring that innovation is balanced with accountability and moral considerations.

Your AI solutions can move quickly and do right by people—turning ethics into a genuine competitive edge that fuels growth and credibility.

Keep these actionable insights front and center:

- Integrate bias detection and transparency checkpoints early in your development cycles

- Establish clear, lightweight governance programs with cross-functional teams driving ethical accountability

- Maintain continuous human-in-the-loop oversight especially for high-impact AI decisions

- Stay proactive on regulatory updates and embed compliance as a core part of your workflow

- Foster a company culture where curiosity meets accountability to keep ethics top of mind

- Proactively address the risk that AI could be used to spread misinformation by implementing safeguards and supporting fact-checking initiatives

Start now by running a quick ethical risk assessment on your latest AI initiatives, adopting tools that reveal bias or explain decisions, and naming champions to lead ethics efforts across teams.

Taking these concrete steps transforms ethical AI from an abstract ideal into a strategic advantage that accelerates innovation and builds unstoppable momentum.

Remember: Fast development and ethical AI aren’t enemies—you can have both with the right mindset and action plan.

When curiosity meets accountability, and ethical responsibility is prioritized, your AI doesn’t just keep up with the future—it shapes it.

For further expert perspectives on these evolving trends, see “AI Governance In 2025: Expert Insights On Ethics, Tech, And Law” and “AI Governance in 2025: Navigating Global AI Regulations and Ethical Frameworks” (LawReview.Tech).