How to Train AI Models for Better Results: 2025 Ultimate Guide

Key Takeaways

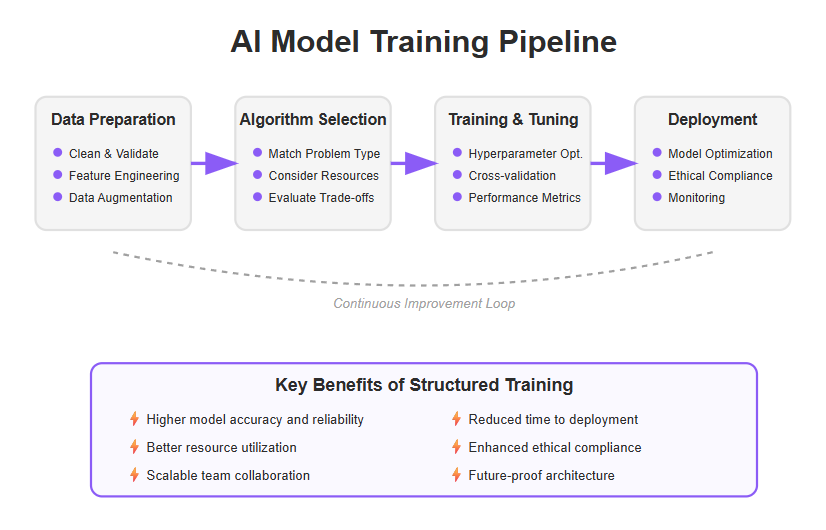

Unlock the full potential of your AI training strategy with these actionable insights designed for startups and SMBs ready to accelerate in 2025. From sourcing clean data to ethical deployment, these core principles help you build smarter, faster, and more trustworthy AI models.

- Prioritize high-quality, ethically sourced data by removing duplicates, fixing errors, and ensuring compliance with regulations like GDPR and CCPA to boost your model’s reliability and fairness.

- Match your AI algorithm to your business needs—choose simpler, interpretable models like random forests for limited data and fast deployment, while exploring cutting-edge options like transformers for complex tasks.

- Implement continuous evaluation and smart hyperparameter tuning using techniques like Bayesian optimization and automated pipelines to keep your models sharp and efficient without manual bottlenecks.

- Embed ethical AI practices from day one by running bias audits, fairness checks, and explainability reports to build user trust and meet regulatory demands without slowing innovation.

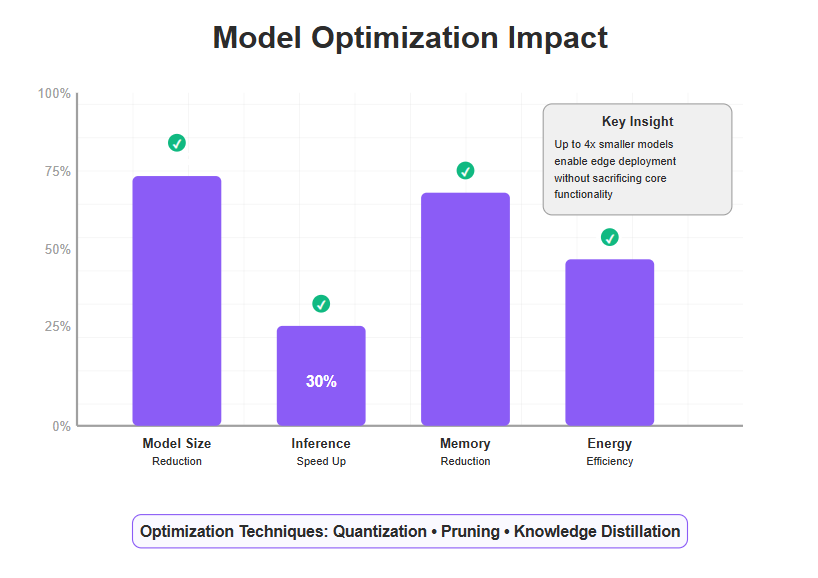

- Optimize AI models with quantization and pruning to reduce model size by up to 4x (for example, by storing 32-bit floating-point weights as 8-bit integers) and often cut inference latency by 30% or more, enabling faster, edge-ready deployments on resource-limited devices.

- Leverage federated and continual learning to train models collaboratively without exposing sensitive data and keep AI adaptable to evolving business needs over time.

- Build AI-ready teams that blend technical and ethical fluency by upskilling existing staff on AI fundamentals, fostering cross-functional collaboration, and using low-code tools to scale efficiently.

- Develop a flexible, scalable AI training roadmap that aligns with your business goals, emphasizes continuous improvement, and balances speed with robustness through modular architectures and automated validation.

Ready to dive deeper? This guide equips you with the tools and mindset to train AI models that deliver real-world results—fast, fair, and future-proof.

Introduction

What if your AI model could learn faster, predict smarter, and deliver sharper insights—without burning through your budget or exhausting your team?

In 2025, artificial intelligence is at the core of many business solutions, and training AI isn’t just about throwing data at algorithms. It’s about mastering a balance of clean data, thoughtful algorithm choices, and ongoing tuning to build models that actually move the needle for your startup or SMB.

Whether you’re navigating limited datasets, grappling with compliance, or aiming to stretch every ounce of performance from your tools, knowing how to train AI models effectively sets you apart.

This guide unpacks practical strategies to help you:

- Prepare and curate high-quality, bias-free data

- Pick and optimize algorithms tailored to your business needs

- Apply continuous evaluation and smart hyperparameter tuning to your machine learning model

- Embed ethical, transparent practices that build trust

- Optimize models for speed, size, and real-world deployment

Plus, we explore cutting-edge paradigms like federated learning and ways to build AI-ready teams, all without nonsense or jargon: just clear, actionable insights.

Understanding these elements today means your AI can stay agile, responsible, and scalable—ready to evolve as your business and the tech landscape shift.

Next up: how to transform raw data into the foundation your AI deserves—because every great model starts with training data you can trust.

Preparing and Curating High-Quality Training Data

Data quality is foundational to building AI models that actually work.

Without clean, valid, and well-sourced data, your model’s predictions will tank fast. For supervised learning, having well-labeled data is essential, as it directly impacts the accuracy of model predictions. Think of it like trying to brew espresso with stale beans—the outcome just won’t be rich or reliable.

Clean, Validate, and Ethically Source Your Data

Start by:

- Removing duplicates, fixing errors, and handling missing values

- Validating data formats and consistency to avoid garbage in/garbage out

- Ensuring datasets come from ethical, compliant sources that avoid bias traps
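To make the cleaning and validation steps concrete, here is a minimal sketch in plain Python. It assumes, hypothetically, that each record is a dict with `email` and `age` fields, that valid ages fall between 0 and 120, and that missing or invalid ages should be filled with the median of the valid ones. Your own validation rules and imputation strategy will differ, and a library such as pandas is the more usual tool at scale.

```python
from statistics import median

def clean_records(records):
    """Deduplicate, normalize, validate, and impute a list of dict records.

    Hypothetical schema: {"email": str, "age": int or None}.
    """
    # 1. Normalize text first so near-duplicates collapse, then deduplicate.
    seen = set()
    deduped = []
    for rec in records:
        email = (rec.get("email") or "").strip().lower()
        key = (email, rec.get("age"))
        if key in seen:
            continue
        seen.add(key)
        deduped.append({"email": email, "age": rec.get("age")})

    # 2. Validate formats: drop rows whose email is obviously malformed.
    valid = [r for r in deduped if "@" in r["email"]]

    # 3. Fix errors: treat out-of-range ages (assumed valid range 0-120) as missing.
    for r in valid:
        age = r["age"]
        if age is not None and not (0 <= age <= 120):
            r["age"] = None

    # 4. Handle missing values: impute with the median of the observed ages.
    observed = [r["age"] for r in valid if r["age"] is not None]
    fill = median(observed) if observed else None
    for r in valid:
        if r["age"] is None:
            r["age"] = fill
    return valid
```

Because normalization runs before deduplication, `" Ana@Example.com "` and `"ana@example.com"` collapse into a single record.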

Robust data governance frameworks protect your training pipeline and keep your company compliant with data regulations like GDPR or CCPA.

Feature Selection and Dataset Enhancement

Raw data is rarely ready for prime time.

Key steps include:

- Feature selection: Picking the most relevant data attributes (input features) for your model to focus on. The right features directly influence model accuracy, and domain knowledge plays a key role in identifying and engineering them.

- Feature engineering: Creating, refining, and transforming input features to improve data quality and model accuracy.

- Dataset enhancement: Using techniques like normalization, scaling, and encoding to get data into model-friendly shape.

This transforms noisy, bulky data into clear, actionable inputs that boost performance.
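The dataset-enhancement techniques named above can be sketched in a few lines of plain Python. These helpers are illustrative, not a library API; in practice, scikit-learn's `MinMaxScaler`, `StandardScaler`, and `OneHotEncoder` cover the same ground. One caution the sketch leaves implicit: in a real pipeline, compute scaling statistics on the training data only and apply them unchanged to validation and test data.

```python
from statistics import mean, pstdev

def min_max_scale(values):
    """Rescale numbers to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in values]

def standardize(values):
    """Center to mean 0 and scale to (population) standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    return [0.0 if sigma == 0 else (v - mu) / sigma for v in values]

def one_hot(categories):
    """Encode category labels as 0/1 vectors, with columns in sorted order."""
    vocab = sorted(set(categories))
    index = {c: i for i, c in enumerate(vocab)}
    rows = []
    for c in categories:
        row = [0] * len(vocab)
        row[index[c]] = 1
        rows.append(row)
    return vocab, rows
```

For example, `min_max_scale([10, 20, 30])` gives `[0.0, 0.5, 1.0]`, and `one_hot(["red", "blue", "red"])` returns the columns `["blue", "red"]` with rows `[[0, 1], [1, 0], [0, 1]]`.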

Consider Data Augmentation to Boost Robustness

If your startup or SMB faces limited datasets, training data augmentation can be a powerful trick.

It helps you:

- Expand dataset size artificially by creating variations

- Prevent overfitting by exposing your model to diverse examples

- Strengthen model resilience against real-world variability

This strategy works like adding seasoning to a dish—it enhances flavor and complexity without needing more raw material.
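For numeric, tabular data, one simple augmentation is to add small random noise (jitter) to existing rows. The sketch below assumes features where a few percent of relative noise leaves the label unchanged; that assumption must hold for your data, and images or text call for different transformations (such as flips and crops, or paraphrasing). The function name and defaults are illustrative.

```python
import random

def augment_with_jitter(rows, labels, copies=2, noise_scale=0.05, seed=0):
    """Return the original rows plus `copies` noisy variants of each row.

    Each numeric feature gets Gaussian noise with standard deviation
    noise_scale * |value| (a relative jitter); labels are copied unchanged,
    which assumes small perturbations do not change the true label.
    """
    rng = random.Random(seed)  # seeded so augmentation is reproducible
    aug_rows, aug_labels = list(rows), list(labels)
    for row, label in zip(rows, labels):
        for _ in range(copies):
            noisy = [x + rng.gauss(0.0, noise_scale * abs(x)) for x in row]
            aug_rows.append(noisy)
            aug_labels.append(label)
    return aug_rows, aug_labels
```

Augment only the training split: adding jittered copies of validation rows would leak information and inflate evaluation scores.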

Practical Takeaways for Startups and SMBs

- Hands-on data cleaning and validation save costly retraining later

- Ethical sourcing isn't just about compliance—it improves trust and result quality

- Augmentation can unlock big performance gains without huge data budgets

Picture your AI's data pipeline as a high-stakes café barista line: every step, from sourcing beans (data) to roasting (cleaning) to brewing (feature selection), impacts the final shot. Skimp on any stage, and your results suffer.

By investing upfront in high-quality training data, you set your AI up for success, making every subsequent training iteration smoother and more reliable.

Selecting and Mastering AI Algorithms for Optimized Performance

Choosing the right AI algorithm is like picking the right tool for a job: it depends on your problem type, data characteristics, and business goals, and the model architecture you settle on can significantly affect the accuracy of your solution. Start by clearly defining the problem you want to solve, whether it’s classification, regression, or clustering.

Match Algorithm to Your Problem and Data

Consider these core factors when selecting algorithms:

- Problem Type: Decision trees work well when a problem follows clear rules, while neural networks shine at complex pattern recognition.

- Data Characteristics: Sparse or imbalanced data might call for ensemble methods or specialized loss functions.

- Business Goals: Prioritize models that balance accuracy with interpretability for stakeholder trust.

- Learning Approach: Choose between supervised learning (using labeled data for tasks like classification and regression), unsupervised learning (exploring data and finding patterns without labels), and reinforcement learning (training models to make decisions through trial and error), depending on your data and objectives.

For example, SMBs handling limited datasets should lean towards simpler models like logistic regression or random forests to get reliable results without heavy resource demands.

Innovations Driving AI Model Training in 2025

Stay current—2025 brings fresh algorithmic advances like:

- Transformer-based architectures expanding beyond NLP into vision and tabular data. Transformer-based models are also driving breakthroughs in generative AI, enabling the creation of synthetic images, text, audio, and video for training and data augmentation.

- The rise of large language models, pre-trained on vast internet text data, is transforming natural language processing and powering applications from chatbots to content generation and beyond.

- AutoML frameworks automating algorithm search and tuning, slashing development time. Many AutoML solutions now leverage pre-trained models to accelerate development and enable rapid transfer learning.

- Hybrid models combining symbolic reasoning with machine learning for better explainability, often using pre-trained models as foundational components.

These innovations are game-changers for startups aiming to build smarter, faster.

Scaling, Interpretability, and Resources

Picking an algorithm isn’t just about accuracy. Consider:

- Scalability: Can your model grow with your data volume? Training large models often requires significant computational resources, especially when handling substantial datasets or deploying complex neural networks.

- Interpretability: Will your team and users understand the model’s decisions?

- Resource Needs: Is the training and inference cost feasible for your infrastructure? Leveraging cloud platforms like Google Cloud and its Vertex AI service can provide scalable infrastructure, access to pre-trained models, and flexible deployment options to meet these demands.

Many SMBs face trade-offs here—complex deep learning might boost results but demand costly GPUs and slow deployment.

Real-World Trade-Offs for Startups and SMBs

Balancing complexity and deployment feasibility is key. Here’s what to weigh:

- Complexity vs Speed: Simpler models deploy faster and are easier to maintain.

- Performance vs Explainability: Transparent models foster user trust but might sacrifice some accuracy.

- Optimization Potential: Some algorithms, like gradient boosting, respond especially well to hyperparameter tuning, unlocking further gains beyond a baseline model.

When making decisions, weigh model complexity, speed, and interpretability together so the chosen approach aligns with your goals.

What Algorithm Choice Means Downstream

Your upfront pick impacts how you optimize and explain models later. For example:

- Transformer models need careful tuning but excel at adaptable feature extraction. The architecture you choose directly affects how well the model generalizes across different data distributions and, in vision tasks, across object scales.

- Tree-based models allow clear rule extraction, which helps with audits and bias checks. The extracted rules are only as trustworthy as the patterns the model captured, so they should reflect the true structure and relationships in your dataset.
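To show what rule extraction from a tree-based model looks like, here is a depth-1 decision tree (a decision stump) written from scratch. It is a teaching sketch, not a production tree learner, and it assumes one numeric feature with 0/1 labels; the feature name `days_inactive` used below is hypothetical. For full trees, scikit-learn's `sklearn.tree.export_text` prints comparable if/else rules.

```python
def fit_stump(xs, ys, feature_name="x"):
    """Fit a depth-1 decision tree on one numeric feature with 0/1 labels.

    Tries a threshold midway between each pair of adjacent distinct sorted
    values and keeps the one with the fewest misclassifications. Returns a
    human-readable rule plus the threshold and the label on each side.
    """
    pairs = sorted(zip(xs, ys))
    best = None
    for (a, _), (b, _) in zip(pairs, pairs[1:]):
        if a == b:
            continue  # no threshold fits between equal values
        t = (a + b) / 2
        left = [y for x, y in pairs if x <= t]
        right = [y for x, y in pairs if x > t]
        # Predict the majority label on each side (ties go to 1).
        left_label = int(sum(left) * 2 >= len(left))
        right_label = int(sum(right) * 2 >= len(right))
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, left_label, right_label)
    if best is None:
        raise ValueError("need at least two distinct feature values")
    _, t, left_label, right_label = best
    rule = f"IF {feature_name} <= {t} THEN {left_label} ELSE {right_label}"
    return rule, t, left_label, right_label
```

For example, `fit_stump([1, 2, 3, 10, 11, 12], [0, 0, 0, 1, 1, 1], "days_inactive")` returns the rule `IF days_inactive <= 6.5 THEN 0 ELSE 1`, which a reviewer can read and challenge directly.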

If you want to deep dive, check out our “Master Cutting-Edge Algorithms to Boost AI Model Performance” guide for hands-on optimization tactics.

Remember, the best algorithm is the one that fits your data, goals, and resources—not necessarily the fanciest.

“Choosing an AI algorithm is less about complexity and more about alignment with your unique business puzzle.”

Picture this: Your startup needs quick, explainable results to convince investors—you might skip a deep neural net in favor of an interpretable random forest that delivers faster insights at lower cost.

Focus on mastering the trade-offs today to build models that scale smoothly and empower decision-making tomorrow.

Continuous Evaluation and Hyperparameter Tuning for Ongoing Improvement

Regularly checking your AI model’s health isn’t optional; it’s essential. Continuous evaluation catches performance drops early, so issues are identified and addressed before they hurt your model in production.

Key Metrics and Validation Strategies

Understanding which numbers tell the real story is crucial.

Focus on these common evaluation metrics:

- Accuracy, precision, and recall for classification tasks

- Mean squared error (MSE) or mean absolute error (MAE) for regression

- F1 score as a balance between precision and recall
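The metrics listed above are simple to compute by hand, which helps when checking what a dashboard or library reports. Here is a minimal sketch for a binary classification task (assuming labels encoded as 0 and 1) and for regression; the function names are illustrative.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

def regression_metrics(y_true, y_pred):
    """Mean squared error (MSE) and mean absolute error (MAE)."""
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / len(errors)
    mae = sum(abs(e) for e in errors) / len(errors)
    return {"mse": mse, "mae": mae}
```

On imbalanced data, accuracy alone can look healthy while recall is poor, which is why precision, recall, and F1 are worth reporting side by side.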

Pair metrics with best practices for validation:

- Use cross-validation to get a more reliable performance estimate than a single train/test split

- Hold out a separate test set for final model assessment

- Monitor performance on fresh data to detect concept drift early
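Cross-validation can be sketched as a generator of index splits, which keeps it independent of any particular model. The function name and defaults below are illustrative; scikit-learn's `KFold` offers the same behavior.

```python
import random

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)  # shuffle once so folds are not ordered
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, val
        start += size
```

Carve out the final test set before running these folds, and pass only the remaining rows to `k_fold_indices`, so the test set stays untouched until the final assessment.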

Tracking these numbers regularly helps identify when your model starts to falter or overfit.

Why Hyperparameter Tuning Matters

Think of hyperparameters as dials that control your model’s learning process.

Tuning them well can boost accuracy and efficiency without changing the model structure.

Here are the top five hyperparameter tuning techniques to try:

- Grid Search: Systematic, but can be slow for big search spaces

- Random Search: Faster and often just as effective

- Bayesian Optimization: Uses the results so far to pick promising settings to try next

- Hyperband: Stops unpromising trials early and allocates more resources to promising candidates

- Evolutionary Algorithms: Mimic natural selection to search complex spaces

(For a deep dive, check out our “5 Critical Hyperparameter Tuning Techniques for AI Excellence” guide.)
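Grid search and random search are easy to compare side by side. In this sketch, `toy_objective` is a hypothetical stand-in for "train the model with these settings and return a validation score"; in practice that call is the expensive part. Libraries such as Optuna, or scikit-learn's `GridSearchCV` and `RandomizedSearchCV`, provide production versions, and Bayesian optimization or Hyperband would replace the sampling loop with smarter candidate selection.

```python
import itertools
import math
import random

def toy_objective(params):
    """Hypothetical validation score (higher is better), peaking at
    learning_rate=0.1 and max_depth=6. Replace with real train-and-evaluate."""
    lr_term = (math.log10(params["learning_rate"]) + 1) ** 2
    depth_term = 0.01 * (params["max_depth"] - 6) ** 2
    return -(lr_term + depth_term)

def grid_search(objective, grid):
    """Try every combination in `grid` (dict mapping name -> list of values)."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def random_search(objective, space, n_trials=20, seed=0):
    """Try `n_trials` random configurations; `space` maps name -> sampler(rng)."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {name: sample(rng) for name, sample in space.items()}
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

grid = {"learning_rate": [0.001, 0.01, 0.1, 1.0], "max_depth": [2, 4, 6, 8]}
space = {
    "learning_rate": lambda rng: 10 ** rng.uniform(-4, 0),  # log-uniform sampling
    "max_depth": lambda rng: rng.randint(2, 10),
}
best_grid = grid_search(toy_objective, grid)
best_random = random_search(toy_objective, space, n_trials=30)
```

Sampling the learning rate on a log scale is a common design choice, because useful values often span several orders of magnitude.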

Automate and Accelerate Tuning

Modern workflows use AI-assisted tools that automate tuning, which can save weeks of manual experiments.

These platforms balance speed with maintaining model integrity by:

- Avoiding overfitting during tuning

- Logging hyperparameter configurations systematically

- Integrating with continuous evaluation pipelines

Picture this: your team deploys a training pipeline that updates model weights nightly, runs a battery of metrics, and tunes hyperparameters—all without manual bottlenecks.

Balancing Speed and Reliability

In fast-paced startup environments, speed matters. But blind tuning risks breaking the model.

Effective frameworks combine:

- Automated evaluation runs for quick insights

- Human-in-the-loop reviews for critical checks

- Adaptive stopping criteria to save resources

This way, you get rapid feedback loops without sacrificing quality.
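Adaptive stopping criteria are often implemented as early stopping with a patience window. The sketch below is a minimal version under the assumption that lower validation loss is better; the class name, `patience`, and `min_delta` are illustrative choices to tune for your setup.

```python
class EarlyStopping:
    """Signal a stop when validation loss hasn't improved for `patience` checks."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta  # minimum decrease that counts as improvement
        self.best = float("inf")
        self.bad_checks = 0

    def step(self, val_loss):
        """Record one validation result; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_checks = 0
        else:
            self.bad_checks += 1
        return self.bad_checks >= self.patience
```

Call `step()` after each evaluation run; in an automated pipeline, a `True` result can end the trial and free compute for other candidates.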

Emerging practices, such as using chain-of-thought prompting when evaluating language models, can further improve explainability and robustness.

Continuous evaluation paired with smart hyperparameter tuning isn’t just best practice—it’s the engine powering better AI results in 2025.

Keeping your models nimble, accurate, and efficient over time means you stay ahead in competitive markets—and spot problems before they become disasters.

Ethical, Transparent, and Explainable AI Practices

The pressure to build ethical AI systems has never been higher. Fairness, transparency, and accountability aren’t just buzzwords—they’re essentials for earning user trust and meeting regulatory demands in 2025.

Incorporating user feedback is also crucial for monitoring and maintaining AI system performance, as it helps detect drift and guides model retraining to ensure ongoing effectiveness.

Embedding Ethics into AI Development

Start by incorporating these core practices throughout your model development cycle:

- Bias mitigation: Use diverse, representative datasets and run bias detection tools regularly.

- Fairness checks: Evaluate how your model performs across different user groups to avoid unintended discrimination.

- Explainability reports: Generate clear, accessible summaries of decision-making processes within your AI to keep humans in the loop.

These steps don’t just protect your project—they’re proven to boost adoption. For example, finance apps employing fairness audits saw a 23% increase in customer trust within six months.
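A basic fairness check compares how a model behaves across user groups. This sketch reports, for each group, the share of positive predictions (selection rate) and the accuracy, then computes the demographic parity difference, one of several common fairness metrics. It assumes binary 0/1 predictions and one group label per row; libraries such as Fairlearn offer more complete tooling, and what gap counts as acceptable is a policy decision the code cannot settle.

```python
from collections import defaultdict

def group_report(y_true, y_pred, groups):
    """Per-group selection rate (share of positive predictions) and accuracy."""
    stats = defaultdict(lambda: {"n": 0, "positive": 0, "correct": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        s = stats[g]
        s["n"] += 1
        s["positive"] += int(p == 1)
        s["correct"] += int(t == p)
    return {
        g: {"selection_rate": s["positive"] / s["n"], "accuracy": s["correct"] / s["n"]}
        for g, s in stats.items()
    }

def demographic_parity_difference(report):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = [r["selection_rate"] for r in report.values()]
    return max(rates) - min(rates)
```

Looking at accuracy next to selection rate matters: a model can select groups at similar rates while being much less accurate for one of them.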

Navigating Regulations Without Slowing Innovation

AI rules are evolving fast in the US, UK, and LATAM, with governments demanding transparency alongside GDPR-like privacy safeguards.

- Stay proactive by embedding compliance into your workflows rather than retrofitting it later.

- Use automated tools to generate audit trails and maintain data integrity without heavy manual overhead.

Transparent communication with stakeholders—whether investors or end users—is vital. Explaining “how and why” your AI makes decisions fosters confidence and prevents misunderstandings down the line.

Balancing Speed and Responsibility for Startups

Startups and SMBs often feel caught between moving fast and building ethically. The solution? Adopt strategic frameworks that:

- Prioritize ethical guardrails that don’t block innovation

- Use iterative checks instead of waiting for “perfect” fairness

- Allocate time for explainability without stalling development

This balanced approach helps smaller teams manage risks while staying competitive.

Why Explainable AI Wins Long-Term

Explainable AI is more than compliance—it’s a growth lever. When users understand AI decisions, they’re more likely to engage consistently and endorse your product.

Picture this: your customer service chatbot explains its recommended solution, reducing frustration and support tickets. That’s explainability creating real-world value.

Building ethical, transparent, and explainable AI means you don’t just meet expectations—you earn your users’ trust day after day.

Taking these steps transforms AI from a black box into a partner your customers welcome, helping you stand out in a crowded market.

Quotable:

“Ethical AI is the foundation for trust, transforming users from skeptics into advocates.”

“Transparency isn’t a checkbox—it’s the secret sauce for lasting AI adoption.”

“Fairness audits can boost customer trust by over 20% in months, not years.”

Advanced Techniques for AI Model Optimization and Efficient Deployment

Shrinking AI model size while boosting inference speed is critical for real-world applications. Optimizing trained models for deployment keeps them both efficient and effective in production. Two staple optimization techniques are quantization and pruning. Quantization reduces the numerical precision of model parameters, cutting memory use and increasing speed, usually with only a small loss in accuracy. Pruning trims away redundant neural network connections, creating leaner models ready for deployment. These techniques are especially valuable for large models, where they help maintain performance while reducing resource requirements. Starting from a pre-trained model can further accelerate the path to deployment, and careful choices at each step of the training process affect how well the model performs once deployed.

In short, quantization and pruning streamline deployment and make trained AI models practical for real-world use.

These methods are game changers when deploying AI on resource-constrained or edge devices like smartphones or IoT sensors. You gain faster responses, less battery drain, and smoother user experiences.

Key benefits of quantization and pruning include:

- Up to 4x smaller model sizes

- Inference latency reductions often surpassing 30%

- Enabling on-device AI that doesn't rely on constant cloud access
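The arithmetic behind quantization and pruning fits in a short sketch. It uses symmetric per-tensor int8 quantization (one scale for the whole weight list) and unstructured magnitude pruning, both chosen here for simplicity. Real toolchains, such as PyTorch's quantization utilities or TensorFlow Lite, add calibration, per-channel scales, and hardware-specific kernels, and actual latency gains depend on that hardware support.

```python
from array import array

def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clip to the int8 range [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map int8 values back to approximate floats."""
    return [scale * v for v in q]

def storage_bytes(weights, q):
    """Bytes needed as 32-bit floats vs. signed 8-bit integers."""
    fp32 = array("f", weights)  # C float: 4 bytes on common platforms
    int8 = array("b", q)        # signed char: 1 byte
    return fp32.itemsize * len(fp32), int8.itemsize * len(int8)

def magnitude_prune(weights, sparsity=0.5):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned
```

Storing int8 values instead of 32-bit floats is where the 4x figure comes from. Pruning, by contrast, only shrinks storage or speeds up inference when the zeros are kept in a sparse format or the hardware can skip them.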

Edge AI takes this further by running computations locally, minimizing round-trip delays. Picture a smart security camera instantly detecting threats without lag. This setup is perfect for latency-sensitive applications used in healthcare, manufacturing, or real-time analytics.

For a deeper dive, check out the "Unlock Transformative Techniques for AI Model Optimization" guide, which covers advanced frameworks and case studies.

Optimizing inference-time compute is another vital piece of the puzzle. Techniques like chain-of-thought prompting extend the processing steps a model takes during inference, which can improve prediction quality without retraining the model. It’s like giving your AI a moment to “think out loud.”

Practical measures to optimize inference include:

- Dynamically allocating computation time based on input complexity

- Leveraging adaptive algorithms that balance speed and accuracy

- Monitoring real-time performance metrics for ongoing tweaks
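One way to allocate computation based on input complexity is a model cascade: a cheap model answers easy inputs, and only low-confidence cases are escalated to a larger model. In this sketch, `keyword_model` and `large_model` are hypothetical stand-ins that return a label and a confidence score, and the 0.9 threshold is an assumption to tune against validation data.

```python
def cascade_predict(x, fast_model, slow_model, confidence_threshold=0.9):
    """Run the cheap model first; escalate to the expensive one only when unsure.

    Both models are callables returning (label, confidence).
    Returns the label and which path served the request.
    """
    label, confidence = fast_model(x)
    if confidence >= confidence_threshold:
        return label, "fast"
    label, _ = slow_model(x)
    return label, "slow"

def keyword_model(text):
    """Hypothetical cheap model: confident only when a strong keyword appears."""
    words = text.lower().split()
    if "great" in words:
        return "positive", 0.95
    if "awful" in words:
        return "negative", 0.95
    return "neutral", 0.5

def large_model(text):
    """Hypothetical stand-in for an expensive model, such as a large network."""
    words = text.lower().split()
    return ("negative" if "not" in words else "positive"), 0.99
```

Logging which path served each request (the second return value) doubles as a real-time metric, showing how often the expensive model is actually needed.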

Balancing model fidelity and computational efficiency remains tricky. Highly compressed models may lose nuance, but heavy ones stall real-time use cases. The sweet spot depends on your specific application and hardware limits.

Imagine a chatbot on your startup's app: it needs to respond fast and accurately without lag; optimized models make that possible.

Quotable nuggets:

- "Quantization and pruning turn bulky AI models into sleek, speedy machines — perfect for mobile and edge."

- "Edge AI slashes latency by processing data right where it’s created — no cloud, no wait."

- "Sometimes, smart inference time is as powerful as smarter training time."

To get your models running lean and fast, start experimenting with these optimization techniques today—your users will thank you with better, snappier AI experiences.

Leveraging Cutting-Edge Training Paradigms: Federated and Continual Learning

Federated Learning lets AI models train across multiple devices or servers without moving sensitive data to a central place. Picture hospitals training a shared model without ever exposing patient records—that’s privacy by design.

Other advanced training paradigms include semi-supervised learning, which combines labeled and unlabeled data to improve model performance, and transfer learning, where pre-trained models are adapted to new tasks for faster training and better results.

Why Federated Learning Matters Now

Industries with strict data privacy requirements benefit hugely, because they reduce the risk of data leaks while still leveraging large-scale data. Examples include:

- Healthcare

- Finance

- Insurance

This method shines by keeping data local and transmitting only model updates, cutting down on compliance headaches while accelerating collaborative innovation.
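At the server, the core of federated learning can be as simple as a weighted average of client model weights, the idea behind Federated Averaging (FedAvg). The sketch below assumes each client sends a flat list of weights plus its local example count; production systems, for example those built with frameworks like Flower or TensorFlow Federated, add secure aggregation, client sampling, and handling for dropped clients.

```python
def federated_average(client_updates):
    """FedAvg-style aggregation of client model weights.

    client_updates: list of (weights, num_examples) pairs, where weights is a
    list of floats. Only these updates leave the clients; raw data never does.
    Returns the average weighted by each client's number of examples.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training examples reported")
    dim = len(client_updates[0][0])
    avg = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must send weights of the same shape")
        for i, w in enumerate(weights):
            avg[i] += w * n / total
    return avg
```

Weighting by example count keeps a client with 300 local examples from being drowned out by one with 100, while neither ever shares its raw records.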

Staying Sharp with Continual Learning

Continual Learning keeps AI models from going stale. Instead of training once and fading into irrelevance, models adapt to new data continuously while limiting how much they forget of what they already learned. See “The Future of Continual Learning in the Era of Foundation Models: Three Key Directions” for more.

Imagine a marketing AI that evolves with seasonal trends or user preferences without losing past campaign insights.

This approach supports:

- Dynamic environments

- Long-term relevance

- Reduced retraining costs

These capabilities are enabled by techniques like elastic weight consolidation (EWC) and replay buffers.
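A replay buffer stores a small sample of past examples and mixes them into new training batches, so the model keeps rehearsing older patterns. This minimal sketch fills the buffer with reservoir sampling; the class, `capacity`, and `replay_fraction` are illustrative. Elastic weight consolidation, which instead penalizes changes to weights that mattered for earlier data, is not shown.

```python
import random

class ReplayBuffer:
    """Fixed-size memory of past examples, filled by reservoir sampling.

    Every example seen so far has an equal chance of being in the buffer,
    so older data stays represented instead of being overwritten.
    """

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, example):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(example)
        else:
            j = self.rng.randrange(self.seen)  # uniform in [0, seen)
            if j < self.capacity:
                self.items[j] = example

    def sample(self, k):
        return self.rng.sample(self.items, min(k, len(self.items)))

def mixed_batch(new_examples, buffer, replay_fraction=0.5):
    """Combine fresh examples with replayed old ones for one training step."""
    n_replay = int(len(new_examples) * replay_fraction)
    batch = list(new_examples) + buffer.sample(n_replay)
    for ex in new_examples:
        buffer.add(ex)  # new data becomes eligible for future replay
    return batch
```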

Integrating These Paradigms Successfully

Merging federated and continual learning introduces fresh challenges:

- Ensuring consistent model updates across distributed nodes

- Handling communication overhead without latency spikes

- Balancing model complexity with device capabilities

Best practice tips include:

- Using hierarchical aggregation to reduce communication costs

- Implementing fallback mechanisms for delayed or missing updates

- Segmenting models so edge devices handle lightweight tasks

These steps prepare your AI systems for scalable, future-proof deployment.

What’s Next in 2025 and Beyond?

Federated and continual paradigms are shaping how AI respects privacy while staying relevant. As more startups and SMBs adopt these, expect:

- Greater regulatory alignment

- Expanding use cases in IoT and mobile apps

- Enhanced tools simplifying deployment complexity

“Imagine AI models that learn from millions, without exposing a single byte of sensitive data—federated learning turns that vision into reality.”

“Continual learning keeps your AI fresh, like a plant that never stops growing, adapting seamlessly to the changing seasons of your business.”

Together, these methods are unlocking new levels of efficiency and trust at the core of modern AI systems.

Adopting federated and continual learning strategies today means building AI that’s both powerful and principled—ready to scale without compromise.

Ensuring Security and Compliance in AI Model Training

AI training pipelines face a growing number of security threats, from data breaches exposing sensitive information to model poisoning attacks that corrupt outcomes. These risks can quickly erode trust in your AI systems.

Protect Your Data and Models

Implementing solid protective measures is non-negotiable. Focus on these essentials:

- Differential privacy: Adds carefully calibrated statistical noise (to data, query results, or gradients during training) so that individual records cannot be identified, usually at a modest, tunable cost in model accuracy.

- Robust encryption: Safeguards data at rest, in transit, and during computation using strong cryptographic protocols.

- Continuous monitoring: Tracks unusual activity or anomalies throughout the training lifecycle to catch threats early.
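During training, differential privacy is commonly applied through DP-SGD: each example's gradient is clipped to a maximum norm, the clipped gradients are summed, and Gaussian noise scaled to that norm is added. The sketch below shows only that mechanism on plain Python lists; it does not compute the resulting privacy budget (epsilon, delta), which libraries such as Opacus or TensorFlow Privacy track for you. The default `max_norm` and `noise_multiplier` values are illustrative assumptions.

```python
import math
import random

def clip(vector, max_norm):
    """Scale a gradient vector down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in vector))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * factor for v in vector]

def private_mean_gradient(per_example_grads, max_norm=1.0, noise_multiplier=1.1, seed=None):
    """DP-SGD-style aggregation: clip each example's gradient, sum, add
    Gaussian noise with std noise_multiplier * max_norm, then average.

    Mechanism only: no privacy accounting is performed here.
    """
    rng = random.Random(seed)
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for g in per_example_grads:
        for i, v in enumerate(clip(g, max_norm)):
            total[i] += v
    sigma = noise_multiplier * max_norm
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [v / len(per_example_grads) for v in noisy]
```

Higher `noise_multiplier` values give stronger privacy guarantees at the cost of noisier updates, which is the privacy-utility trade-off to tune.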

Together, these measures form a sturdy defense that balances security with seamless model development.

Build Auditability and Transparency

Accountability requires more than just protection. Auditing frameworks and tools help you maintain transparency, letting you track changes, data lineage, and model decisions. This may include:

- Version control logs for datasets and code

- Automated compliance checks aligned with industry standards

- Real-time alerts for suspicious access or data manipulation
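For dataset version logs, a content fingerprint lets you show later exactly which data a model was trained on. This sketch hashes each JSON-serializable record with SHA-256 and combines the sorted hashes, so row order does not change the fingerprint but any edited, added, or removed row does. The audit-entry fields, including `code_version` and `actor`, are hypothetical; adapt them to your own logging system.

```python
import hashlib
import json
from datetime import datetime, timezone

def dataset_fingerprint(records):
    """Order-independent SHA-256 fingerprint of JSON-serializable records."""
    row_hashes = sorted(
        hashlib.sha256(json.dumps(r, sort_keys=True).encode("utf-8")).hexdigest()
        for r in records
    )
    return hashlib.sha256("".join(row_hashes).encode("utf-8")).hexdigest()

def audit_entry(dataset_name, records, code_version, actor):
    """Build one append-only audit-log entry tying a dataset version to a run."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "dataset": dataset_name,
        "fingerprint": dataset_fingerprint(records),
        "num_records": len(records),
        "code_version": code_version,  # hypothetical field, e.g. a commit hash
        "actor": actor,                # hypothetical field: who or what ran it
    }
```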

For startups and SMBs, lightweight but robust auditing can stop problems before they surface—and build confidence with clients and regulators.

Navigate Compliance with Ease

Security intersects deeply with regulations across markets. Whether you’re operating in the US, UK, or LATAM, staying compliant means understanding standards like GDPR, CCPA, or Brazil’s LGPD. Tailor security workflows that:

- Align with region-specific data privacy laws

- Scale with your business without excess overhead

- Embed compliance checks into development and deployment

This proactive stance prevents costly legal headaches while enabling innovation.

Practical Security: Keeping It Lean and Effective

You don’t need to bloat your AI projects with heavy security tech. Start by:

- Identifying your highest-risk data and access points

- Integrating encryption and privacy by design early

- Automating monitoring to reduce manual overhead

Security workflows built right mean you move fast without falling prey to vulnerabilities.

Quotable Takeaways:

“Secure AI training isn’t a nice-to-have—it’s the foundation of trust in your product.”

“Continuous monitoring and differential privacy together keep your models clean and safe without slowing development.”

“Compliance is less about ticking boxes and more about embedding ethics and security into your AI DNA.”

Picture this: your AI system quietly deflects a subtle poisoning attempt thanks to automated alerts, sparing your team hours of crisis management. That’s the power of a security-first approach done right.

Keeping security and compliance tightly woven into AI development isn’t an add-on—it’s your smartest move for long-term success in 2025 and beyond.

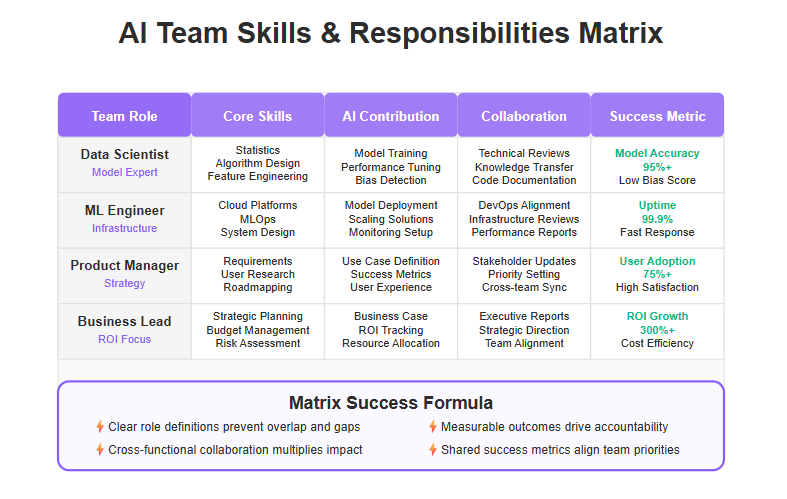

Building and Empowering AI-Ready Teams for Effective Collaboration

Training teams to work alongside AI tools isn’t just nice to have—it’s essential for startups and SMBs ready to compete in 2025. When your people understand how AI integrates into workflows, productivity and innovation skyrocket.

Upskilling Beyond Code

Building AI expertise goes well past writing algorithms. Teams need skills in:

- Navigating and integrating AI APIs into everyday tools

- Interpreting and validating model outputs to catch biases or errors

- Applying ethical frameworks to ensure fairness and transparency

Mastering these areas creates a culture where AI-powered decisions are trusted, not feared.

Fostering True Collaboration

Powerful AI adoption happens when technical and non-technical team members unite. Encourage:

- Regular communication between data scientists, engineers, marketers, and product folks

- Transparent sharing of AI insights to democratize understanding

- Inclusive brainstorming sessions to spot AI opportunities and risks

This tight-knit collaboration prevents siloed knowledge that stalls progress.

Scalable AI Teams on a Budget

Startups and SMBs often juggle limited resources. Here’s how to scale smartly:

- Cross-train existing employees on AI fundamentals

- Leverage low-code/no-code AI platforms to accelerate deployment

- Partner with external experts for targeted skills and coaching

This approach reduces hiring costs while maintaining accountability and output quality.

“An AI-ready team is your best investment—it’s where raw data turns into real value.”

Picture this: your marketing lead spotting anomalies in AI-generated insights just in time to pivot a campaign, or your customer service team using AI dashboards to personalize support instantly. That’s empowered collaboration in action.

Continuous upskilling combined with a collaborative culture enables businesses to embrace AI confidently and sustainably.

Focus on growing your team’s fluency, not just their technical chops. It’s the mix of curiosity, clear communication, and accountability that turns AI ambitions into tangible results.

Building AI-ready teams doesn’t have to be overwhelming; it’s a strategic move that pays off with faster innovation cycles and a stronger competitive edge. For more insights, see “AI-ready teams: training and best practices” by Dmitri Koteshov (Mitrix Technology, Medium, August 2025).

Strategic Integration and Future-Proofing Your AI Model Training

Building a winning AI strategy means connecting all the dots, from clean data and sharp algorithms to ongoing tuning and ethical guardrails. Timing is part of the roadmap too: knowing when to start training ensures your AI systems begin learning from data at the right moment. When these parts work in harmony, your model adapts faster and delivers stronger, more reliable results.

Continuous improvement is key to successfully training AI models: iterative cycles refine performance and outcomes. Supporting your team with best practices and regular updates helps your models learn effectively, keeping them accurate and competitive over time.

Align Training with Your Business Reality

Start by mapping out a clear deployment roadmap that fits your business goals, budget, and team capacity.

Key roadmap elements to consider:

- Prioritize use cases with the highest ROI

- Schedule incremental model updates

- Allocate resources for continuous data refresh and tuning

For example, a startup might target a lean MVP with fast iteration cycles, while a more established SMB could opt for stable, scalable AI solutions that grow with market demands.

Stay Flexible to Scale and Evolve

The AI landscape shifts rapidly. You need a training approach that scales from small proof-of-concepts to full production without losing speed or accuracy.

Focus on:

- Modular architecture separating data prep, model training, and deployment

- Cloud-native tools supporting elasticity

- Automated pipelines for continuous evaluation and optimization

Coupling this with ongoing team learning, via workshops or communities, helps your crew stay sharp on emerging techniques like federated and continual learning that are reshaping AI in 2025.

Continuous Improvement is Your Competitive Edge

Think of model training as an iterative journey, not a one-time event. Regular assessments ensure your AI stays relevant and doesn’t drift away from core business metrics.

Implement frameworks that:

- Monitor performance using real-world KPIs

- Run automated hyperparameter tuning cycles

- Integrate fairness and explainability checks to avoid ethical pitfalls
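One lightweight way to watch for drift between regular assessments is the Population Stability Index (PSI), which compares a feature's recent distribution against its training-time baseline. This sketch uses equal-width bins taken from the baseline's range (quantile bins are also common) and a small epsilon to avoid taking the log of zero; the function name and defaults are illustrative.

```python
import math

def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample (`expected`) and a recent sample (`actual`).

    Bin edges come from the baseline's range; out-of-range values are
    clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            i = int((v - lo) / width)
            counts[min(max(i, 0), bins - 1)] += 1
        return [c / len(values) for c in counts]

    e, a = shares(expected), shares(actual)
    # Each term is non-negative, so PSI is 0 for identical distributions.
    return sum((ai - ei) * math.log((ai + eps) / (ei + eps)) for ei, ai in zip(e, a))
```

A commonly quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift worth investigating, but thresholds should be validated against your own KPIs.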

This combo keeps your models both robust and trustworthy—a must-have for lasting user confidence.

Balancing Agility with Robustness

Being fast is great, but not at the expense of reliability. Use agile workflows paired with rigorous validation to move quickly without cutting corners.

For instance, injecting small batches of augmented or adversarial data helps stress-test models before wide rollout—preventing costly mistakes down the line.
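A minimal Python sketch of such a pre-rollout stress test, assuming the model is exposed as a plain function. It uses random Gaussian noise as the augmentation, a simpler stand-in for true adversarial examples (which are usually generated from the model's gradients). `toy_model`, the noise level, and the 5% tolerance are illustrative assumptions.

```python
import random


def accuracy(model, xs, ys):
    """Fraction of examples the model labels correctly."""
    return sum(1 for x, y in zip(xs, ys) if model(x) == y) / len(xs)


def perturb(x, noise, rng):
    """Add Gaussian noise to every feature (simple augmentation, not a gradient-based attack)."""
    return [v + rng.gauss(0.0, noise) for v in x]


def stress_test(model, xs, ys, noise=0.1, max_drop=0.05, seed=0):
    """Compare clean vs. perturbed accuracy and flag drops larger than max_drop."""
    rng = random.Random(seed)
    clean = accuracy(model, xs, ys)
    perturbed = accuracy(model, [perturb(x, noise, rng) for x in xs], ys)
    return {"clean": clean, "perturbed": perturbed, "passed": clean - perturbed <= max_drop}


def toy_model(x):
    """Hypothetical classifier: predicts 1 when the feature sum is positive."""
    return 1 if sum(x) > 0 else 0


data_rng = random.Random(42)
xs = [[data_rng.uniform(-1, 1), data_rng.uniform(-1, 1)] for _ in range(200)]
ys = [toy_model(x) for x in xs]
print(stress_test(toy_model, xs, ys, noise=0.1))
```

Points near the decision boundary flip under noise, so the perturbed score typically dips below the clean one; deciding whether that dip is acceptable is the rollout decision.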

Ready to surf the waves of 2025 AI? Think integration, not isolation. Weaving preparation, mastery, and ethics into one smart workflow keeps your AI training strategy ahead of the curve—scalable, responsible, and built to last.

Conclusion

Mastering AI model training unlocks your startup or SMB’s potential to deliver smarter, faster, and more reliable solutions that truly move the needle. When you invest in high-quality data, choose algorithms aligned with your goals, and embed continuous evaluation paired with ethical guardrails, you’re not just building models; you’re creating AI-powered engines that drive real growth. By training on well-curated, representative data, your model learns to recognize complex patterns and keeps improving as you iterate.

To start leveling up your AI training today, focus on these critical moves:

- Clean and ethically source your data to lay a rock-solid foundation for trustworthy results

- Pick algorithms that balance performance and interpretability based on your unique needs

- Implement regular evaluation and smart hyperparameter tuning to keep models sharp and adaptive

- Monitor training dynamics, such as loss curves and gradient norms, as the optimizer updates internal parameters like weights and biases, so learning stays stable and converges

- Embed fairness and transparency into your workflows to earn user trust and regulatory peace of mind

- Optimize models for efficiency through quantization or pruning to speed up real-world use cases
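To make pruning concrete, here is a NumPy sketch of unstructured magnitude pruning, one common variant: the smallest-magnitude weights are zeroed on the assumption that they contribute least. `magnitude_prune` is a hypothetical helper; production frameworks provide their own pruning utilities and often fine-tune briefly afterward to recover accuracy.

```python
import numpy as np


def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Return a copy of `weights` with the smallest-magnitude fraction set to zero."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(sparsity * weights.size)  # how many weights to remove
    if k == 0:
        return weights.copy()
    magnitudes = np.abs(weights)
    # k-th smallest magnitude; weights tied at the threshold are pruned too
    threshold = np.partition(magnitudes.ravel(), k - 1)[k - 1]
    return np.where(magnitudes > threshold, weights, 0.0)


w = np.array([[0.1, -0.5], [0.05, 2.0]])
pruned = magnitude_prune(w, sparsity=0.5)
print(pruned)
```

With 50% sparsity, the two smallest weights (0.1 and 0.05) become zero while -0.5 and 2.0 survive.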

During training, the model adjusts its internal parameters through iterative cycles, minimizing errors and increasing accuracy as it learns from data.
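That adjustment loop is gradient descent. Here is a minimal pure-Python sketch for a one-feature linear model: at each step it measures the mean squared error, computes how that loss changes with respect to the weight `w` and bias `b`, and nudges both in the direction that lowers it. The learning rate, epoch count, and toy data are illustrative assumptions; real frameworks compute gradients automatically.

```python
def train_linear(xs, ys, lr=0.05, epochs=1000):
    """Fit y ≈ w * x + b by gradient descent on mean squared error (MSE)."""
    w, b = 0.0, 0.0  # internal parameters, starting from zero
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for each example under the current parameters
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(error^2)
        grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2 / n) * sum(errors)
        # Step against the gradient to reduce the loss
        w -= lr * grad_w
        b -= lr * grad_b
    mse = sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / n
    return w, b, mse


# Toy data generated from y = 2x + 1
w, b, mse = train_linear([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
print(f"w={w:.3f}, b={b:.3f}, mse={mse:.6f}")  # w=2.000, b=1.000, mse=0.000000
```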

Taking these steps turns AI from a mysterious black box into a strategic advantage that scales with your business demands.

Your next moves? Audit your current training data pipeline for quality gaps, experiment with simpler but effective algorithm choices, and automate regular performance checks starting this week. Don’t wait for perfect conditions—iterate quickly and build ethics and efficiency directly into your process. Rally your team around AI fluency and collaboration to power continuous improvement without burnout.

Remember: AI excellence lives in action, not aspiration. Every smart tweak you make today compounds into smoother launches and stronger ROI tomorrow.

“Building AI that works brilliantly—and ethically—is your fastest route to innovation that truly clicks with customers.”

Step confidently into 2025 with training strategies that push boundaries while keeping your values front and center. Your future-ready AI journey begins now.

Frequently Asked Questions

1) How much does it cost to train an AI model in 2025?

The cost of AI model training in 2025 varies by scope, data volume, and infrastructure. A lean proof-of-concept using transfer learning or fine-tuning can run $10,000–$50,000, while large, from-scratch training with multimodal transformers and extensive experimentation can reach $300,000–$500,000 or more. A practical cost breakdown is: data prep (20–30%), training/experimentation (50–60%), and deployment/MLOps (10–20%). Savings come from parameter-efficient fine-tuning (e.g., LoRA/PEFT), spot instances, mixed precision, and managed services like Vertex AI. Reusing open models from Hugging Face on top of established frameworks such as PyTorch or TensorFlow can shorten time-to-value considerably. Hardware is a major driver: GPU clusters (e.g., NVIDIA H100) or TPUs can cost thousands per day if left idle; autoscaling and early stopping reduce waste. For startups and SMBs, the best return typically comes from fine-tuning a strong base model plus targeted AI model optimization (quantization, pruning) to shrink inference costs. Align spend to clear KPIs and iterate; don’t over-invest before validating business impact.
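The percentage breakdown above is easy to turn into a planning helper. A minimal Python sketch, assuming you pick one share per phase from within those ranges (25/60/15 here, an illustrative choice). The idle-GPU helper uses a placeholder hourly rate, not a quoted cloud price; plug in your provider's actual pricing.

```python
def split_budget(total, shares=None):
    """Split a total AI training budget across phases by fractional share."""
    if shares is None:
        # Illustrative shares chosen from within the ranges in the text
        shares = {"data_prep": 0.25, "training": 0.60, "deployment_mlops": 0.15}
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1.0")
    return {phase: round(total * share, 2) for phase, share in shares.items()}


def idle_gpu_cost(num_gpus, hourly_rate, idle_hours):
    """Money spent on GPUs that stay allocated but do no useful work."""
    return num_gpus * hourly_rate * idle_hours


print(split_budget(50_000))
# {'data_prep': 12500.0, 'training': 30000.0, 'deployment_mlops': 7500.0}
print(idle_gpu_cost(num_gpus=8, hourly_rate=3.0, idle_hours=24))  # 576.0
```

Under these shares, a $50,000 proof-of-concept would reserve about $30,000 for training and experimentation, and even a modest placeholder rate shows how quickly a forgotten cluster adds up.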

2) How much data do you really need to train AI models well?

Data needs depend on task complexity, accuracy targets, and whether you train from scratch or fine-tune. From-scratch computer vision or NLP often requires hundreds of thousands to millions of labeled examples. In contrast, fine-tuning a high-quality, pre-trained model with 5,000–20,000 curated samples can match or beat from-scratch results for many business use cases. Quality trumps quantity: balanced classes, de-duplication, consistent labeling, and robust validation improve accuracy significantly. With limited data, use data augmentation, synthetic data, or semi-supervised learning to expand coverage. For sensitive domains, federated learning lets you train across distributed datasets without moving raw data, increasing diversity while preserving privacy. Establish a data lifecycle process: define acceptance criteria, track lineage, and monitor drift. In short, start with the smallest useful dataset, iterate with transfer learning, and invest in labeling quality. You can then scale samples strategically as evaluation metrics plateau.
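Several of the quality checks mentioned above (de-duplication, consistent labeling, class balance) can be sketched in a few lines of Python. This assumes a labeled text dataset stored as `(text, label)` pairs; the normalization rule (lowercase, collapsed whitespace) and the helper names are illustrative assumptions, and real pipelines often add near-duplicate detection on top.

```python
from collections import Counter


def normalize(text):
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return " ".join(text.lower().split())


def deduplicate(records):
    """Keep the first occurrence of each (normalized text, label) pair."""
    seen, unique = set(), []
    for text, label in records:
        key = (normalize(text), label)
        if key not in seen:
            seen.add(key)
            unique.append((text, label))
    return unique


def conflicting_labels(records):
    """Texts that appear with more than one label, a common sign of labeling errors."""
    labels_by_text = {}
    for text, label in records:
        labels_by_text.setdefault(normalize(text), set()).add(label)
    return [t for t, labels in labels_by_text.items() if len(labels) > 1]


def class_balance(records):
    """Fraction of records carrying each label."""
    counts = Counter(label for _, label in records)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


data = [
    ("Great product", "pos"),
    ("great   product", "pos"),
    ("Terrible", "neg"),
    ("Okay I guess", "pos"),
    ("terrible", "pos"),
]
clean = deduplicate(data)
print(len(clean), conflicting_labels(clean), class_balance(clean))
# 4 ['terrible'] {'pos': 0.75, 'neg': 0.25}
```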

3) What’s the difference between training from scratch and fine-tuning?

Training from scratch initializes model weights randomly and learns everything from your dataset. It offers maximum flexibility but demands large, clean corpora, extensive compute, and longer cycles to reach production-grade performance. It’s justified for highly specialized tasks, proprietary modalities, or when existing models underperform. Fine-tuning starts from a pre-trained foundation model (e.g., BERT, a vision transformer, a speech model) and adapts it to your domain. With parameter-efficient techniques like LoRA/PEFT, you train only a small number of added or selected parameters, which can cut memory and compute needs several-fold while typically preserving accuracy. Fine-tuning typically delivers faster deployment, lower costs, and easier maintenance, which makes it ideal for startups and SMBs. Practically, many teams fine-tune first, then consider partial re-training or from-scratch only if they hit ceilings. Pair either approach with rigorous AI model optimization (quantization, pruning) and robust MLOps for observability, rollback, and re-training pipelines. Choose based on data availability, latency/accuracy targets, interpretability needs, and total cost of ownership.
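The parameter savings behind LoRA come from learning a low-rank update instead of a full weight matrix. Here is a NumPy sketch of that idea (not the PEFT library's API): a frozen weight `W` is combined with a trainable product `B @ A` of rank `r`, scaled by `alpha / r` as in the original LoRA formulation. The layer size (4096 × 4096), rank 8, and the helper names are illustrative assumptions.

```python
import numpy as np


def lora_forward(x, W, A, B, alpha):
    """Apply frozen weight W plus a scaled low-rank update B @ A."""
    r = A.shape[0]  # rank of the update
    delta = (alpha / r) * (B @ A)  # shape (d_out, d_in), same as W
    return x @ (W + delta).T


def trainable_params(d_in, d_out, r):
    """Parameter counts: full fine-tuning of W vs. training only A and B."""
    full = d_in * d_out
    lora = r * (d_in + d_out)
    return full, lora


full, lora = trainable_params(4096, 4096, r=8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%

# B starts at zero, so the adapted layer initially matches the base layer
rng = np.random.default_rng(0)
d_in, d_out, r = 16, 8, 2
W = rng.standard_normal((d_out, d_in))
A = rng.standard_normal((r, d_in)) * 0.01
B = np.zeros((d_out, r))
x = rng.standard_normal((4, d_in))
print(np.allclose(lora_forward(x, W, A, B, alpha=16), x @ W.T))
```

For this hypothetical layer, only about 0.4% of the parameters are trained, which is where the memory savings come from.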

4) How do you evaluate the accuracy and reliability of a trained model?

Use task-appropriate evaluation metrics: accuracy, precision, recall, and F1-score for classification; ROC-AUC for ranking quality, or PR-AUC when classes are heavily imbalanced; MAE/MSE/RMSE for regression; BLEU/ROUGE or human review for generative tasks. Apply stratified cross-validation, maintain a hold-out test set, and, if possible, a golden dataset reflecting real-world edge cases. After deployment, monitor concept drift and data drift: a model that scores 0.92 F1 in the lab might degrade in production as user behavior shifts. Instrument your pipeline with tools like Weights & Biases, MLflow, or Vertex AI Model Monitoring for continuous tracking, alerting, and experiment lineage. Add explainability (SHAP/LIME) to understand feature contributions and support audits. Finally, validate business impact: tie model metrics to KPIs such as conversion, handle-time, or risk reduction. Good AI model training doesn’t end at first pass; make evaluation continuous, versioned, and repeatable, with clear thresholds that trigger re-training or rollback.
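For binary classification, the core metrics reduce to counts from a confusion matrix. A minimal pure-Python sketch; the `should_retrain` helper and its 0.85 F1 threshold are hypothetical examples of the kind of rule that can trigger re-training.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def should_retrain(metrics, min_f1=0.85):
    """Hypothetical policy: flag the model when F1 falls below a chosen threshold."""
    return metrics["f1"] < min_f1


m = binary_metrics([1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 0, 1, 1, 0])
print(m, should_retrain(m))
# {'accuracy': 0.75, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75} True
```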

5) What are the most common mistakes when you train AI models?

Frequent pitfalls include poor data hygiene (duplicates, leakage, label errors), ignoring class imbalance, and insufficient validation (testing on the training set or tuning to the test set). Teams also under-invest in hyperparameter optimization: simple random search, Bayesian optimization (Optuna, Ray Tune), or Hyperband can lift accuracy meaningfully. Overfitting arises from excessive complexity, insufficient regularization, or weak augmentation; combat it with early stopping, dropout, and better cross-validation. Another trap is skipping MLOps: without experiment tracking, CI/CD, and monitoring, models silently drift and fail. Infrastructure missteps (over-provisioned GPUs, lack of autoscaling, or not using mixed precision) inflate cost without improving outcomes. Finally, neglecting ethics, fairness, and compliance can sink adoption. Bake in bias checks, explainability, and privacy (GDPR/CCPA). The antidote is a disciplined pipeline: clean data, robust evaluation, AI model optimization, and automated monitoring, plus a clear link to business KPIs.
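Early stopping is one of the cheapest defenses against overfitting. A minimal framework-agnostic sketch in Python: it watches validation loss and signals a stop after `patience` epochs without meaningful improvement. The patience value and the loss sequence are illustrative; libraries such as Keras and Lightning ship their own early-stopping callbacks.

```python
class EarlyStopping:
    """Signal a stop when validation loss stops improving for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta  # smallest drop that counts as an improvement
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def first_stop_epoch(losses, patience=3):
    """Replay a loss history and report when early stopping would trigger."""
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(losses):
        if stopper.step(loss):
            return epoch, stopper.best
    return None, stopper.best


print(first_stop_epoch([0.9, 0.7, 0.6, 0.62, 0.61, 0.65, 0.5]))  # (5, 0.6)
```

Training halts at epoch 5 after three epochs without beating 0.6; in practice you would also restore the checkpoint saved at the best epoch.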

6) Which frameworks and tools are best for training AI models in 2025?

For core development, PyTorch and TensorFlow/Keras remain the dominant AI frameworks. For NLP/CV, Hugging Face Transformers provides state-of-the-art models and trainers. For large-scale experimentation and distributed training, use Lightning, Ray, or managed platforms like Vertex AI for autoscaling, hyperparameter tuning, and training pipelines. Tracking and governance are vital: Weights & Biases or MLflow for experiments, artifacts, and lineage. On hardware, NVIDIA H100 and next-gen TPUs deliver top throughput; for cost control, combine spot instances (with frequent checkpoint saves so preempted jobs can resume) with mixed precision and gradient checkpointing, which trades extra compute for lower memory use. For deployment, pair quantization/pruning with runtimes like TensorRT, ONNX Runtime, or TorchServe to reduce latency and costs, especially for edge AI. Choose a stack that fits your team: prioritize simplicity, observability, and portability across research, training, and production. The best setup is the one that shortens feedback loops and keeps your AI model training repeatable and auditable.
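To see where quantization savings come from, here is a NumPy sketch of symmetric per-tensor int8 quantization: each float32 weight is mapped to an 8-bit integer plus one shared scale factor, which shrinks storage fourfold. This illustrates the idea only; runtimes such as TensorRT and ONNX Runtime apply their own, more sophisticated schemes (per-channel scales, calibration), and the helper names here are hypothetical.

```python
import numpy as np


def quantize_int8(w):
    """Symmetric per-tensor quantization: w ≈ scale * q with q in [-127, 127]."""
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize_int8(q, scale):
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * np.float32(scale)


rng = np.random.default_rng(0)
w = rng.standard_normal((256, 256)).astype(np.float32)
q, scale = quantize_int8(w)
error = np.max(np.abs(dequantize_int8(q, scale) - w))
print(w.nbytes // q.nbytes, float(error) <= scale / 2 + 1e-5)  # 4 True
```

The rounding error per weight is bounded by half the scale, which is why accuracy usually holds up well; outlier-heavy layers are where per-channel scales or calibration start to matter.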

7) How do you keep models current and relevant over time?

Treat production models as living systems. Implement continuous evaluation with drift detection and alerts tied to quality thresholds or KPI movements. Schedule incremental re-training using fresh data, and consider continual learning strategies to adapt without catastrophic forgetting. For privacy-sensitive domains, use federated learning to train across distributed data silos while keeping raw data local. Automate your MLOps loop: pipeline new samples through validation, labeling, and re-training; run canary deployments and shadow tests; and maintain rollback plans. To control costs and latency, apply AI model optimization (quantization, pruning) before each release and profile inference regularly. Close the loop with users: feedback widgets, human-in-the-loop review, and error analysis yield high-leverage fixes. Document changes, version datasets/models, and track decisions. This operating cadence—monitor → analyze → fine-tune → validate → deploy—keeps models accurate, fair, and aligned with business goals as data and behavior evolve.
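Drift detection can start with a simple distribution comparison. A minimal Python sketch of the Population Stability Index (PSI), one widely used drift score, comparing a reference sample of a feature (for example, from training data) against live production values. The bin count, the small floor that avoids log(0), and the commonly cited 0.25 alert threshold are rules of thumb, not universal standards.

```python
import math


def psi(reference, live, bins=10):
    """Population Stability Index of `live` relative to `reference` (equal-width bins)."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor empty bins at a tiny share so the log term stays finite
        return [max(c / len(values), 1e-6) for c in counts]

    ref, cur = bin_shares(reference), bin_shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_alert(score, threshold=0.25):
    """Rule-of-thumb alert: PSI above ~0.25 is often treated as significant drift."""
    return score > threshold


reference = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]
print(psi(reference, reference), drift_alert(psi(reference, shifted)))  # 0.0 True
```

An alert like this can feed the monitor → analyze step of the loop above, prompting error analysis before any re-training is scheduled.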