7 Real-World Use Cases for AI Agents in Modern Full-Stack Teams


Meta Description: Discover 7 real-world use cases for AI agents in modern full-stack teams that showcase how intelligent, autonomous systems are transforming development workflows – from coding and testing to DevOps, documentation, and project management – helping teams build better software faster.

Outline:

Introduction – Introduce AI agents in modern full-stack teams, preview the 7 real-world use cases covered in the article, and highlight their growing impact on productivity.

What are AI Agents in Full-Stack Teams? – Define AI agents in a software development context and how they function as “virtual team members” integrated into development tools.

Benefits of AI Agents for Full-Stack Development – Explain why full-stack teams are adopting AI agents: faster delivery, reduced repetitive work, improved code quality, and ability to catch issues early.

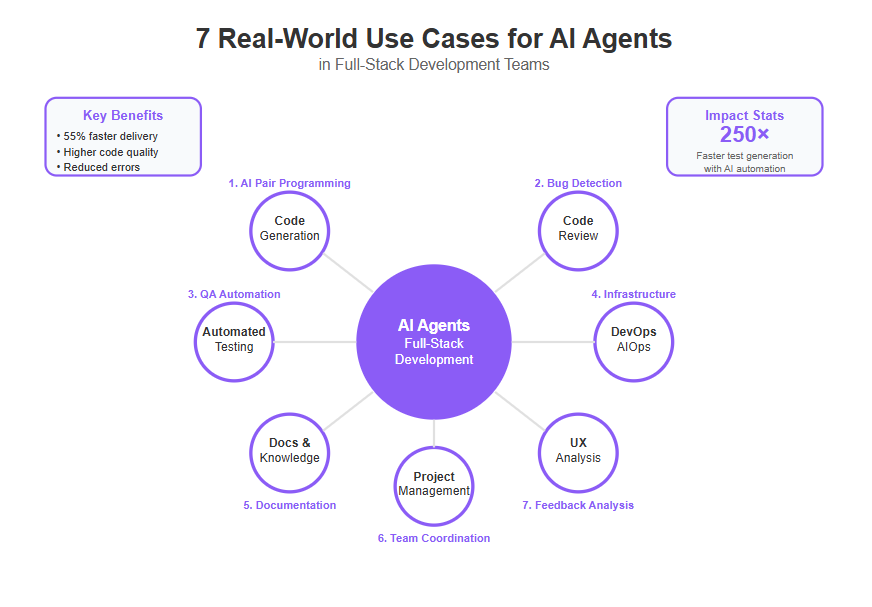

Use Case 1: AI-Powered Code Generation and Pair Programming – How AI coding assistants (e.g. GitHub Copilot) help developers write code faster and with less mental strain, acting as smart pair programmers.

Use Case 2: Intelligent Code Review and Bug Detection – AI-driven code review tools that automatically catch bugs, enforce standards, and suggest fixes (e.g. DeepCode, SonarQube) to improve code quality.

Use Case 3: Automated Testing and Quality Assurance – AI agents that generate unit tests and test data, boosting test coverage and reliability while saving developers time (e.g. Diffblue Cover, which its vendor says generates tests 250× faster than writing them by hand).

Use Case 4: AI-Assisted DevOps and Infrastructure Management – Applying AI (AIOps) for monitoring, anomaly detection, and automated incident response in DevOps pipelines, resulting in fewer outages and optimized resource use.

Use Case 5: AI for Documentation and Knowledge Management – AI tools that automatically generate and update documentation (code comments, API docs, internal wikis), keeping knowledge bases up-to-date and reducing “documentation drift”.

Use Case 6: AI in Project Management and Team Coordination – Virtual project manager agents that schedule meetings, track tasks, send reminders, and summarize discussions, helping full-stack teams stay organized and efficient.

Use Case 7: AI-Driven User Experience and Feedback Analysis – Utilizing AI to analyze user feedback and usage data for product improvements, and even assist in UI/UX design prototyping, enabling data-driven decisions for a better user experience.

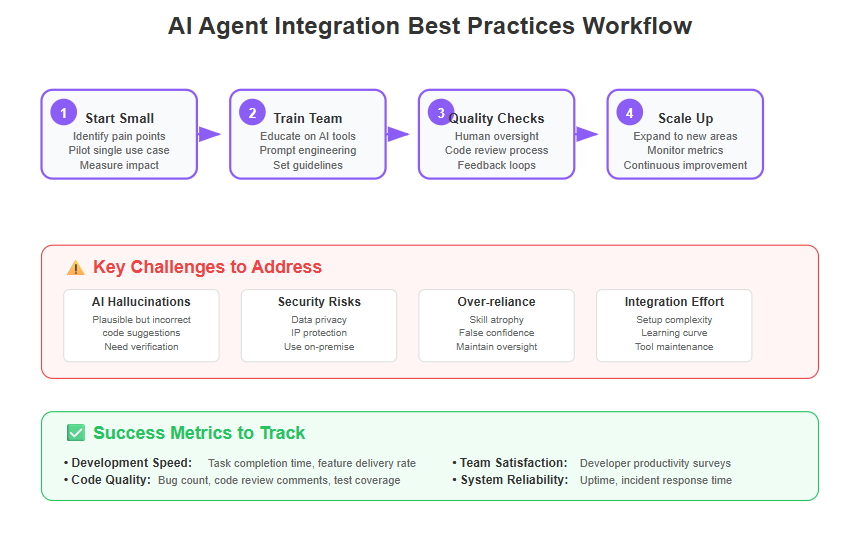

Challenges and Considerations when Using AI Agents – Discuss potential challenges: AI mistakes or “hallucinations” (plausible but incorrect code), integration hurdles, security/privacy concerns, and the need for human oversight.

Best Practices for Integrating AI Agents in Your Team – Tips for adoption: start with small pilot projects, choose the right tools, train developers in prompt engineering, enforce code reviews for AI outputs, and maintain data privacy (e.g. using on-premises AI solutions).

FAQs – Answer 6+ common questions, e.g. What are AI agents? Will AI replace developers? How do you get started with AI agents? Which AI tools are popular? What are the risks? Do you need to review AI-generated code?

Conclusion – Summarize how AI agents are empowering full-stack teams, emphasize the augmentation (not replacement) of developers, and provide an optimistic outlook on co-creating software with AI.


Introduction

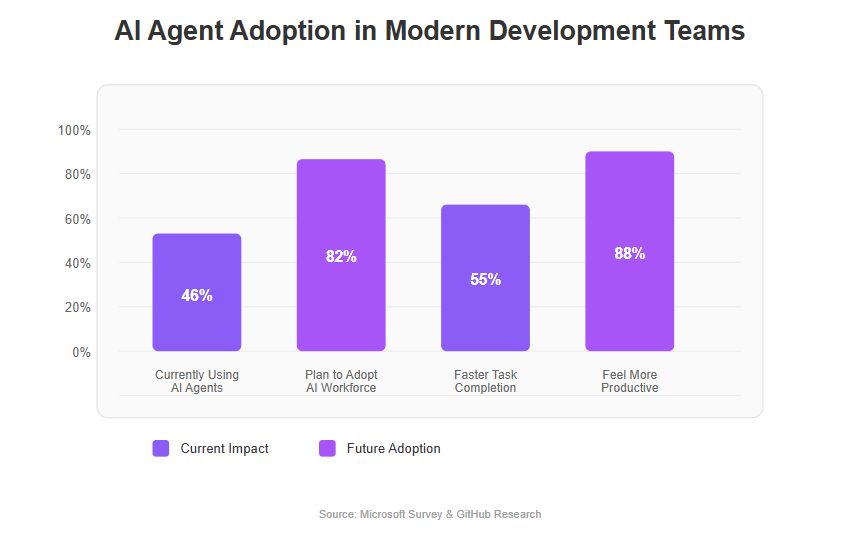

Imagine having a tireless, intelligent assistant on your software team that handles the boring and repetitive parts of development so your human developers can focus on the fun, creative stuff. This is no longer science fiction – it’s the reality of modern AI agents in full-stack teams. These AI-driven “teammates” are transforming how software is built by using AI to automate tasks, accelerate workflows, and enhance code quality. In fact, according to a recent Microsoft survey, 46% of business leaders say their companies already use AI agents to automate workflows, and 82% expect to adopt an “agentic workforce” with AI digital team members in the next year or so.

Some businesses adopting AI agents also report lower operational costs in customer service, which lets them reallocate resources elsewhere. The momentum is clear: embracing AI agents can be a game-changer for productivity and innovation in full-stack development.

In this article, we will explore 7 real-world use cases for AI agents in modern full-stack teams (from coding assistance to DevOps and beyond) that illustrate how these autonomous systems are elevating software development. Each use case is backed by real examples and expert insights, showing how AI agents are already being put to work in development environments. By understanding these scenarios, you’ll see how full-stack teams – which juggle everything from front-end user experiences to back-end infrastructure – can leverage AI to work smarter, deliver faster, and build more reliable software. From an AI pair programmer that helped developers finish a coding task 55% faster in a GitHub experiment, to an AI DevOps watchdog that catches issues before they cause downtime, the following sections shed light on the concrete benefits of integrating AI agents into your workflow. AI-powered virtual assistants can also handle routine customer inquiries as the first point of contact in customer service, which shows how versatile these systems are.

Before diving into the use cases, let’s clarify what we mean by “AI agents” in this context and why they’ve risen to prominence. Then, we’ll delve into each use case, provide practical examples, and highlight key advantages. By the end, you should have a clear picture of how AI agents can fit into your team’s toolset – not as a replacement for human developers, but as powerful assistants and collaborators. Let’s begin our journey into these seven game-changing use cases that are reshaping full-stack development in the real world.

What are AI Agents in Full-Stack Teams?

AI agents in software development are essentially intelligent software assistants that perform specific development tasks autonomously or semi-autonomously alongside human developers. Unlike a generic chatbot or a simple script, an AI agent has a defined role or goal within the development lifecycle and can make context-aware decisions. These agents are typically powered by advanced machine learning models – often large language models (LLMs) or other AI techniques – allowing them to understand code, documentation, and natural language instructions. In practice, they integrate directly into the tools and platforms developers already use (such as IDEs, code repositories, testing frameworks, or CI/CD pipelines) to help with work like coding, testing, debugging, documentation, and more.

AI agents represent a new class of intelligent software systems that operate autonomously or semi-autonomously within development environments.

Think of an AI agent as a “virtual team member” that can be assigned tasks. For example, you might have an AI agent act as a frontend assistant that builds UI components based on design specs, another as a testing agent that generates and runs test cases, and another as a DevOps agent that monitors system health. Each agent is task-focused, meaning it’s configured or trained to excel at a specific set of tasks rather than acting as a general-purpose assistant. This specialization is what makes them so effective. According to one definition, these agents can “write, debug, refactor, test, or document code by understanding prompts, project context, and development goals”. In essence, they operate with a degree of autonomy, handling routine or analytically complex tasks, and some can adapt their behavior based on data and feedback.

It’s important to distinguish AI agents from traditional automation scripts. Traditional automation (like a shell script or a hard-coded CI rule) will do exactly what it’s programmed to do – nothing more. If conditions change or something unpredicted happens, a simple script may fail or produce incorrect results. In contrast, an AI agent has a layer of “intelligence.” It leverages learned patterns and can make inferences, meaning it might handle slight variations in a task or optimize its actions based on past experience. For instance, an AI agent code assistant doesn’t just do simple text substitution; it actually understands context from your project and can generate code that fits the context or predict what you might need next. Developers can even converse with such agents (through comments or chat interfaces), assigning them roles like “Assistant: fix this bug in the payment module” or “Please document this API”, and the agent will attempt to carry out the instruction in a sensible way.
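The loop that separates an agent from a fixed script can be sketched in a few lines of Python. This is a minimal illustration, not any product's architecture: `toy_decide` is a hypothetical, scripted stand-in for a language-model call, and `run_tests` and `read_log` are made-up tools. The agent repeatedly decides on an action, executes a tool, and feeds the observation back into its next decision, with a step limit as a safety net.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

Tool = Callable[[str], str]  # a tool is a named function the agent may call
# A decision is either ("call", tool_name, argument) or ("done", answer, "").
Decision = tuple[str, str, str]


@dataclass
class Agent:
    role: str
    tools: dict[str, Tool]
    # Hypothetical stand-in for an LLM call: sees the goal and past observations.
    decide: Callable[[str, list[str]], Decision]
    history: list[str] = field(default_factory=list)

    def run(self, goal: str, max_steps: int = 5) -> str:
        for _ in range(max_steps):
            kind, value, arg = self.decide(goal, self.history)  # decide
            if kind == "done":
                return value
            result = self.tools[value](arg)                     # act
            self.history.append(f"{value}({arg}) -> {result}")  # observe
        return "gave up: step limit reached"


def toy_decide(goal: str, history: list[str]) -> Decision:
    """Scripted policy standing in for a model's reasoning."""
    if not history:
        return ("call", "run_tests", "payment")
    if "FAILED" in history[-1]:
        return ("call", "read_log", "payment")
    return ("done", "likely cause: " + history[-1].split(" -> ")[1], "")


agent = Agent(
    role="debugging assistant",
    tools={
        "run_tests": lambda module: f"FAILED: test_refund in {module}",
        "read_log": lambda module: "KeyError: 'currency'",
    },
    decide=toy_decide,
)
print(agent.run("fix the failing refund test"))  # likely cause: KeyError: 'currency'
```

Unlike a script, the next action depends on what the previous one observed; swapping `toy_decide` for a real model call changes the policy without changing the loop.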

In summary, AI agents in full-stack teams are AI-driven collaborators integrated into the software development workflow. They act as autonomous assistants that augment human capabilities. They are not sci-fi robots taking over the job – rather, they handle well-defined tasks (often the boring, repetitive, or highly data-driven ones) and free up humans to focus on creative and complex aspects of building software. As we explore the use cases, this concept will become clearer through concrete examples.

Benefits of AI Agents for Full-Stack Development

Why are so many full-stack teams welcoming AI agents with open arms? The benefits are numerous and align closely with the pressures of modern software development. In today’s industry, release cycles are short, teams are lean, and quality expectations are high. AI agents offer much-needed relief by working faster, smarter, and around the clock. Here are some key benefits:

- 🚀 Speed and Productivity: AI agents can dramatically accelerate development tasks. They can produce in seconds work that might take a human hours. For example, an AI coding assistant can suggest entire blocks of code or complete functions instantly. In a controlled experiment by GitHub, developers using AI code completion finished a coding task 55% faster than those without it. Moreover, in a survey of 2,000 developers, 88% said they felt more productive with AI assistance. This speed boost means teams can ship features faster and meet tight deadlines more easily. AI agents also aid in demand forecasting by analyzing historical sales data and market trends to optimize inventory levels, showcasing their ability to enhance operational efficiency across industries.

In essence, AI agents offer a compelling combination of speed, quality, and efficiency improvements. They help teams do more with less, which is especially crucial for full-stack teams responsible for a wide range of tasks (front-end, back-end, database, deployment, etc.). As we move into the specific use cases, these benefits will shine through in concrete scenarios. Each use case demonstrates a facet of these advantages in action, showing why adopting AI agents has become a top strategy for forward-thinking teams.

Use Case 1: AI-Powered Code Generation and Pair Programming

One of the most prevalent and impactful use cases for AI agents in development is AI-powered code generation – essentially having an AI “pair programmer” by your side. In a full-stack team, developers constantly switch between front-end and back-end code, writing everything from HTML/JSX templates to database queries. An AI coding assistant can significantly speed up this work by suggesting code as you type or even generating entire functions on command. Tools like GitHub Copilot, Amazon CodeWhisperer, Tabnine, and others exemplify this use case. They are trained on billions of lines of code and can autocomplete code snippets, generate code from natural language prompts, and offer suggestions for how to implement a given piece of logic.

How it works: As you write code in your IDE, the AI agent infers your intent and predicts what you’re trying to do next. For example, if you start writing a function to fetch user data, the AI might suggest the entire REST API call or SQL query. You can also ask explicitly, e.g. in a comment: “// TODO: sort users by registration date”, and the AI will attempt to generate the code to do that. This feels like collaborating with a knowledgeable colleague who anticipates your needs. In fact, developers often describe Copilot as a kind of AI pair programmer. It’s always there to brainstorm solutions or handle the straightforward parts of coding.
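As an illustration of that comment-driven flow, here is the kind of completion an assistant might propose, shown in Python (so the TODO uses `#` instead of `//`). The `User` shape and field names are assumptions, and actual suggestions vary by tool and by the surrounding code.

```python
from datetime import date
from typing import TypedDict


class User(TypedDict):
    name: str
    registered: date


# TODO: sort users by registration date
def sort_users_by_registration(users: list[User], newest_first: bool = False) -> list[User]:
    """Return a new list ordered by registration date (oldest first by default)."""
    return sorted(users, key=lambda user: user["registered"], reverse=newest_first)


users: list[User] = [
    {"name": "Grace", "registered": date(2024, 5, 1)},
    {"name": "Ada", "registered": date(2023, 1, 9)},
]
print([u["name"] for u in sort_users_by_registration(users)])  # ['Ada', 'Grace']
```

Returning a new list with `sorted` rather than sorting in place is a reasonable default for suggested code, since it does not surprise callers that still hold the original list.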

Real-world impact: The productivity gains from AI code generation are impressive. As noted earlier, an experiment found that programmers using GitHub Copilot were able to complete a coding task 55% faster than those who coded without it. The Copilot users not only finished quicker but also had a slightly higher success rate in completing the task at all. In large enterprises, such gains can translate to releasing features days or weeks sooner. Moreover, internal surveys show a vast majority of developers feel that these AI helpers make them more productive and less frustrated (by handling boilerplate), and even help them stay in “flow” while coding. In other words, the AI takes on the tedious parts of coding, letting humans focus on the interesting logic and edge cases. As one senior engineer put it, “With Copilot, I have to think less about the boilerplate, and when I have to think, it’s the fun stuff”.

Example: Suppose a full-stack developer needs to implement form validation logic on the front-end and corresponding checks on the back-end API. With an AI pair programmer, they could write a quick prompt like “validate email format and password strength” in a comment. The AI might then generate a JavaScript function that uses regex for email and checks password length/complexity. On the back-end (say in a Node.js/Express route), the developer could start typing an if-statement for validating input, and the AI suggests the complete validation code or even recommends using a library. This not only saves time writing code but also occasionally surfaces best practices or edge cases the developer might not have initially considered (like international email formats or a specific password rule).
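Here is a minimal Python sketch of the server-side validation such an assistant might generate. The 12-character minimum and the letters-plus-digits rule are assumed policies, and the email pattern is intentionally permissive; treat it as a draft to check against your own requirements, exactly as you would any AI suggestion.

```python
import re

# Deliberately loose check: something@something.tld with no spaces.
# Non-ASCII characters are allowed so international addresses are not rejected;
# only a confirmation email can prove an address actually exists.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")
MIN_PASSWORD_LENGTH = 12  # assumed policy, not a standard


def validate_signup(email: str, password: str) -> list[str]:
    """Return human-readable problems; an empty list means the input is valid.

    The API must run these checks even when the front end already did,
    because client-side validation can be bypassed.
    """
    errors = []
    if not EMAIL_PATTERN.fullmatch(email):
        errors.append("invalid email format")
    if len(password) < MIN_PASSWORD_LENGTH:
        errors.append(f"password must be at least {MIN_PASSWORD_LENGTH} characters")
    # [^\W\d_] matches any Unicode letter; \d matches any digit.
    if not (re.search(r"[^\W\d_]", password) and re.search(r"\d", password)):
        errors.append("password must contain letters and digits")
    return errors


print(validate_signup("ada@example.com", "correcthorse42"))  # []
```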

Benefits for the team:

- The obvious benefit is speed – features get implemented faster. But another subtle benefit is that junior developers can learn from AI suggestions. It’s like having a mentor who shows how to use certain APIs or corrects your syntax in real-time. This can flatten the learning curve for newcomers.

- Consistency in coding style can also improve. If the AI has been tuned to your project’s patterns, it will output code that matches the existing style and conventions, reducing inconsistencies.

- Additionally, AI can help generate multiple possible approaches to a problem (you can ask for alternative suggestions), which fosters creativity and exploration without the manual effort of writing each approach out.

Of course, it’s not magic – the AI might sometimes suggest code that doesn’t perfectly fit the context or even contains minor errors. That’s why human oversight is still crucial (a theme we will revisit in the Challenges section and FAQs). But as a starting point or a way to speed through routine coding, an AI code generator agent is incredibly valuable. It’s like having a superpower for the coding phase of development, making it one of the most widespread AI agent use cases in modern full-stack teams.

Supporting evidence: GitHub’s data shows AI code assistants help developers code faster and with less mental effort, with developers completing tasks in significantly less time using tools like Copilot. In one survey, 88% of devs felt more productive with such an AI assistant. These stats underscore why AI pair programming has caught on so rapidly.

Use Case 2: Intelligent Code Review and Bug Detection

After the code is written, the next critical step is ensuring its quality – this is where code review and bug detection come in. AI agents are making a strong mark in this area by acting as tireless code reviewers that can analyze code changes for potential issues within seconds. In a full-stack project, where multiple modules and services are interacting, catching bugs or security vulnerabilities early is vital. AI-driven code review tools (such as DeepCode – now part of Snyk, SonarQube with AI plugins, Amazon CodeGuru, Qodana AI, Codacy AI, and newer offerings like GitHub’s code analysis with Copilot X) can automatically scan code for problems and even suggest fixes.

What these agents do: An AI code review agent uses machine learning models (often trained on large corpora of code and known bug patterns) to inspect your source code or a new commit. It looks for things like:

- Bugs and Logical Errors: e.g., null pointer dereferences, array index out of bounds, misuse of an API, copy-paste errors.

- Vulnerabilities: e.g., SQL injection risk, use of deprecated or insecure functions, hardcoded secrets.

- Style and Standards: ensuring the code follows certain style guides or best practices, which in turn affects readability and maintainability.

- Performance Issues: e.g., spotting an inefficient loop or an opportunity to use a faster library method.

These tools often integrate with your version control or CI system. For instance, when a developer opens a pull request on GitHub, an AI review bot can leave comments on the PR highlighting issues. Some can even auto-correct simple issues. IBM describes AI code review as using AI techniques to “assist in reviewing code for quality, style and functionality,” identifying inconsistencies and detecting security issues that developers might overlook. Crucially, they not only flag problems but sometimes provide suggested changes or fixes, acting like an expert reviewer guiding the author.

Traditionally, code analysis has relied on rule-based systems that use predefined rules to catch common issues. AI agents can go beyond these rule-based systems by learning from data and identifying more complex patterns that static rules might miss.
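For contrast, this is what a single hand-written rule looks like. The Python sketch below (not taken from any tool named in this article) uses the standard `ast` module to flag `.execute()` calls whose SQL string is assembled with an f-string, `+`, or `%`.

```python
import ast


def find_unsafe_sql_calls(source: str) -> list[int]:
    """Return line numbers of `.execute(...)` calls whose query string is built dynamically."""
    flagged = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr == "execute"
            and node.args
        ):
            query = node.args[0]
            # f"..." parses as JoinedStr; "..." + x and "..." % x parse as BinOp.
            if isinstance(query, (ast.JoinedStr, ast.BinOp)):
                flagged.append(node.lineno)
    return sorted(flagged)


snippet = '''
cur.execute(f"SELECT * FROM users WHERE id = {uid}")
cur.execute("SELECT * FROM users WHERE id = ?", (uid,))
cur.execute("SELECT * FROM users WHERE name = '" + name + "'")
'''
print(find_unsafe_sql_calls(snippet))  # [2, 4]
```

A rule like this is precise but narrow: it misses queries built with `.format()` or assembled in a variable before the call, which is exactly the kind of variation fixed rules struggle to anticipate.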

Why it’s valuable: Human code reviews are great but not perfect – reviewers can have off days or blind spots, and in busy teams they might spend only a short time on each review. An AI agent, however, will diligently check every line against hundreds of rules and learned patterns. It provides a safety net, catching issues early in the development cycle. For example, an AI reviewer might catch that a developer forgot to handle an error condition, or used a suboptimal algorithm that will slow down in production. By catching such issues before the code is merged or deployed, AI agents help prevent bugs from reaching users (saving the team from fire-fighting later).

Real-world example: Consider a scenario where a developer writes a new feature with database access. The AI code review agent scans the changes and flags that the SQL query constructed in the code is not parameterized, which could lead to a SQL injection vulnerability. It might link to an explanation of the risk and suggest using parameter binding or a safer API. In another case, a developer may introduce a subtle bug by not initializing a variable under certain conditions; the AI agent recognizes a pattern that this could be a null reference error and alerts the team. These are issues a busy human reviewer might miss on a Friday evening, but the AI’s pattern recognition (trained on countless code examples and known bugs) is relentless and fast.
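The SQL injection case above is easy to reproduce. This self-contained sketch uses Python's built-in `sqlite3` module and a hypothetical `users` table to show the flagged pattern next to the parameterized fix a reviewer, human or AI, would suggest.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """In-memory database with a hypothetical users table for the demo."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Flagged pattern: user input is spliced directly into the SQL text.
    return conn.execute(f"SELECT id FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Suggested fix: a bound parameter is always treated as data, never as SQL.
    return conn.execute("SELECT id FROM users WHERE name = ?", (name,)).fetchall()


conn = make_demo_db()
payload = "x' OR '1'='1"
print(find_user_unsafe(conn, payload))  # both rows leak: the OR clause matches everything
print(find_user_safe(conn, payload))    # [] (no user has that literal name)
```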

Impact metrics: The benefits here are somewhat qualitative but immensely important:

- Higher Code Quality: By enforcing standards and best practices uniformly, AI code reviewers improve the baseline quality of the codebase. This leads to fewer issues down the line and easier maintenance.

- Time Savings in Review: Reviewers can focus on design and architectural feedback while letting the AI handle nitpicks and trivial but important checks. Teams report faster code review cycles as a result – the AI flags the easy stuff, the human focuses on deeper issues, and overall review is completed sooner.

- Knowledge Sharing: AI suggestions often include explanations. Developers, especially juniors, can learn why something is a bug or a better way to do it from these AI comments. It’s like having an encyclopedic senior engineer pair reviewing every line of code.

- Security and Reliability: With security-focused AI scans, teams can achieve a level of security auditing that would be hard to do manually on each commit. This reduces the risk of vulnerabilities slipping into production. Likewise, reliability improves if AI catches race conditions or error handling omissions that could cause crashes.

Many companies already use such AI-driven analysis. For instance, DeepCode (an AI-based code analysis tool) has been shown to catch bugs that static analyzers might miss by learning from open-source projects’ bug fixes. Amazon CodeGuru reviews Java and Python code and even profiles running apps to point out inefficient code paths. These tools embody this use case’s real-world adoption.

To put it simply, an AI code review agent acts as an automated second set of eyes. It doesn’t get tired or bored, and it can examine the code at a granular level of detail that humans might not sustain. By using one, full-stack teams can significantly elevate their code quality and prevent costly bugs, all while relieving human reviewers from some of the tedium.

Supporting evidence: AI-based code review tools help developers “save time and improve code quality” by providing suggestions and automated fixes. They integrate into development workflows (IDE or CI) to continuously maintain standards. Examples include not only new AI tools but also augmented traditional tools (like SonarQube with AI plugins) that bring machine learning insights into the review process. This shows the industry trend of embedding AI into the code review stage for better outcomes.

Use Case 3: Automated Testing and Quality Assurance

Testing is a crucial part of full-stack development – it ensures that features work as expected and that changes don’t break existing functionality. However, writing comprehensive test cases and managing test data can be time-consuming. AI agents are stepping up in the Quality Assurance (QA) domain by automating test generation, test maintenance, and even test execution analysis. In this use case, AI becomes your test writer and assistant QA engineer.

AI-generated unit tests: One of the remarkable capabilities of modern AI agents is automatically creating unit tests for your code. Given source code or even just function signatures, an AI can generate test functions that cover various scenarios and edge cases. For example, Diffblue Cover is an AI agent specialized in Java unit test generation. It reads through Java classes and methods and then writes JUnit tests that execute those methods with different inputs, asserting the expected outputs. According to Diffblue, their AI can generate unit tests “250x faster than a human”, and they have case studies like Goldman Sachs using it to write a year’s worth of unit tests overnight. This highlights how powerful this use case is in practice – what might take a QA team weeks or months (writing hundreds of tests) could be done by an AI agent in hours, liberating human testers to focus on more complex testing strategies.

How it works: An AI testing agent typically uses a combination of static code analysis and learned heuristics to determine what needs to be tested. It identifies the key functionality of a function or module – e.g., if a function calculateTax(amount, state) should return a certain value for given inputs, the agent generates multiple calls with different amount and state values and asserts the outcomes. It tries to cover boundary conditions (like negative amounts, very large amounts, unknown state codes) – things developers might forget. AI can even generate fake or mock data needed for tests, such as dummy objects or random strings, ensuring tests are self-contained. Tools like Microsoft’s IntelliTest for .NET (which is built on dynamic symbolic execution rather than machine learning) and various academic projects have explored systematically generating inputs that achieve high code coverage. In addition to generating tests, these AI agents can run the generated tests and report the results automatically.
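To make this concrete, here is a Python counterpart of the hypothetical `calculateTax` (named `calculate_tax`, with made-up rates and assumed error behavior) together with tests in the style an AI generator aims for: typical values, boundaries, and invalid inputs. The tests are written by hand to show the shape of the output; they do not reproduce any particular tool.

```python
from decimal import ROUND_HALF_UP, Decimal

# Hypothetical function under test; the rates are illustrative, not real tax data.
RATES = {"CA": Decimal("0.0725"), "OR": Decimal("0")}


def calculate_tax(amount: Decimal, state: str) -> Decimal:
    if amount < 0:
        raise ValueError("amount must be non-negative")
    if state not in RATES:
        raise KeyError(f"unknown state code: {state}")
    return (amount * RATES[state]).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def test_typical_amounts() -> None:
    assert calculate_tax(Decimal("100.00"), "CA") == Decimal("7.25")
    assert calculate_tax(Decimal("100.00"), "OR") == Decimal("0.00")


def test_boundaries() -> None:
    assert calculate_tax(Decimal("0"), "CA") == Decimal("0.00")
    assert calculate_tax(Decimal("1000000000"), "CA") == Decimal("72500000.00")


def test_invalid_inputs_raise() -> None:
    cases = [(Decimal("-1"), "CA", ValueError), (Decimal("10"), "ZZ", KeyError)]
    for amount, state, error in cases:
        try:
            calculate_tax(amount, state)
        except error:
            continue
        raise AssertionError(f"expected {error.__name__} for {amount}, {state}")


for test in (test_typical_amounts, test_boundaries, test_invalid_inputs_raise):
    test()
print("all generated tests passed")
```

The `test_` names also let a runner such as pytest discover these functions unchanged.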

Benefits:

- Improved Test Coverage: AI can help achieve much higher code coverage by generating tests for parts of the code that lacked tests before. It doesn’t get bored writing the tenth variant of a similar test case. As a result, teams get a more thorough regression suite. LambdaTest notes that AI-generated test cases “cover a wider range, including edge cases that testers often overlook,” and target each line of code for coverage.

- Time and Cost Savings: Writing tests is often a bottleneck, and when deadlines loom, tests might be cut short. AI agents mitigate this by taking on the bulk of test creation, reducing manual effort and saving costs (since bugs caught in testing are far cheaper than bugs caught in production). Test maintenance is also aided – when code changes, some AI agents can update the tests accordingly.

- Consistency: Just like code style, test style and thoroughness become consistent when an AI is generating them following a standard pattern. This leads to reliable, repeatable QA processes. It also enforces a culture where testing isn’t an afterthought; the AI has already put tests in place for new code.

Beyond unit tests: AI in testing isn’t limited to unit tests. Agents can generate API tests, integration tests, and even UI tests to some extent. For example, Postman’s AI assistant, Postbot, can analyze API specifications and example responses to generate test scripts that validate those APIs. This is incredibly useful in a full-stack context: while front-end and back-end are being developed in parallel, you can use an AI agent to create a suite of API tests against a mocked server, ensuring that once the real back-end is connected, it meets the front-end’s expectations. Another scenario: for web UI testing, some AI tools can observe user flows and create Selenium or Playwright scripts automatically to emulate those flows for testing.

Real-world story: A large enterprise might have thousands of legacy code functions with minimal tests. Writing tests for all of them manually is impractical. But an AI agent can systematically go through and start producing tests for each function (especially pure functions or those with clear input-output behavior). One report mentioned that after adopting an AI test generation tool, a team saw their code coverage jump from nearly 0% on some components to over 70% in a short period. The AI generated dozens of tests and even found a few hidden bugs in the process (because when running the new tests, some failed – indicating the code had issues that nobody noticed before).

QA analysis and triage: Another angle is using AI to analyze test results. Imagine your continuous integration runs thousands of tests and some fail. AI can help cluster failures by root cause or analyze logs to pinpoint likely reasons. It can also prioritize which failing tests are most critical. Some advanced AI ops tools even attempt to automatically fix failing tests (for instance, if a test is flaky due to timing issues, an AI might detect that pattern and adjust the wait times or synchronization in the test).
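A simple, non-ML baseline for this kind of triage is to normalize failure messages so that failures with the same underlying cause collapse into one group. AI tools may replace the fixed regular expressions below with learned similarity; the function names and the test-name-to-message input format are assumptions for this Python sketch.

```python
import re
from collections import defaultdict


def failure_signature(message: str) -> str:
    """Mask volatile details so failures with the same cause share one key."""
    message = re.sub(r"0x[0-9a-fA-F]+", "<addr>", message)  # memory addresses first
    message = re.sub(r"\d+", "<n>", message)                 # then any other number
    return message.strip()


def cluster_failures(failures: dict[str, str]) -> dict[str, list[str]]:
    """Group failing test names by error signature, largest group first."""
    groups: dict[str, list[str]] = defaultdict(list)
    for test_name, message in failures.items():
        groups[failure_signature(message)].append(test_name)
    return dict(sorted(groups.items(), key=lambda item: -len(item[1])))


failures = {
    "test_checkout": "TimeoutError: db did not respond in 30s",
    "test_refund": "TimeoutError: db did not respond in 45s",
    "test_login": "AssertionError: expected 200, got 500",
}
for signature, tests in cluster_failures(failures).items():
    print(f"{len(tests)} x {signature}: {tests}")
```

Sorting the largest cluster first is a cheap prioritization heuristic: one fix there is likely to clear the most failures.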

Generating test data: AI agents can create realistic test data (names, addresses, randomized but valid inputs) or even synthetic datasets. This is useful for seeding development and staging environments with non-production data that still looks realistic for testing purposes.
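AI tools can generate richer, context-aware data, but the core requirements (valid-looking, clearly non-production, reproducible) fit in a small standard-library Python sketch. The name lists and the `Customer` fields are placeholders.

```python
import random
from dataclasses import dataclass

# Placeholder vocabularies; a real generator would use richer, locale-aware data.
FIRST_NAMES = ["Ada", "Grace", "Linus", "Margaret", "Alan"]
LAST_NAMES = ["Lovelace", "Hopper", "Torvalds", "Hamilton", "Turing"]
STREETS = ["Main St", "Oak Ave", "Pine Rd"]


@dataclass
class Customer:
    name: str
    email: str
    address: str


def fake_customers(count: int, seed: int = 0) -> list[Customer]:
    rng = random.Random(seed)  # seeded, so every test run sees identical data
    customers = []
    for i in range(count):
        first, last = rng.choice(FIRST_NAMES), rng.choice(LAST_NAMES)
        customers.append(
            Customer(
                name=f"{first} {last}",
                # example.com is reserved for documentation, so no real inbox is used;
                # the index keeps addresses unique.
                email=f"{first}.{last}.{i}@example.com".lower(),
                address=f"{rng.randint(1, 9999)} {rng.choice(STREETS)}",
            )
        )
    return customers


for customer in fake_customers(3, seed=42):
    print(customer)
```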

Overall, AI in testing means higher quality software. With AI agents ensuring that every corner of the code is exercised and validated, full-stack teams can merge changes with greater confidence. It reduces the QA burden on developers (who often would prefer to write code than tests) and dedicated QA engineers can focus on exploratory testing or complex scenario testing that AI might not handle.

Supporting evidence: Vendors of AI test generation tools claim substantial improvements: one tool reportedly generated 10× more tests, four times faster, than a popular code assistant, and is said to let developers spend “95% less time on writing tests”. Furthermore, AI-created tests can cover edge cases humans might miss, thereby improving reliability. These claims help explain why automated testing via AI agents is a real-world use case seeing rapid adoption.

Use Case 4: AI-Assisted DevOps and Infrastructure Management

Modern full-stack teams don’t just write code – they also handle deployment, infrastructure, and monitoring (especially in DevOps or “you build it, you run it” environments). AI agents have emerged as powerful allies in the DevOps arena, a field often termed AIOps when infused with AI. The goal here is to maintain system health, optimize resource usage, and respond to incidents faster than ever, by letting AI analyze the avalanche of data that systems produce.

Intelligent monitoring and anomaly detection: Production systems generate logs, metrics, and traces continuously. AI agents can be tasked with monitoring this data for anomalies – patterns that indicate something might be going wrong. For example, an AI agent can learn the normal range of response times for a web service and raise an alert if latencies spike unexpectedly (possibly indicating a performance regression or an ongoing attack). Unlike traditional monitoring which relies on static thresholds, AI can adapt to patterns and catch subtle deviations. AIOps platforms like Dynatrace, Datadog with AI extensions, or IBM’s Watson AIOps use machine learning to reduce “noise” in alerts (filtering out false alarms) and highlight the real issues that need attention.
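The difference between a static threshold and a learned baseline can be sketched in a few lines of Python. This example uses a rolling mean and standard deviation, a simple statistical stand-in for the machine learning models commercial AIOps platforms use; the 10-point window and 3-sigma cutoff are assumed defaults you would tune.

```python
from statistics import mean, stdev


def detect_anomalies(latencies_ms: list[float], window: int = 10, z: float = 3.0) -> list[int]:
    """Return indexes of points more than `z` standard deviations away from the
    mean of the preceding `window` points: a baseline learned from recent data
    instead of a fixed threshold."""
    anomalies = []
    for i in range(window, len(latencies_ms)):
        recent = latencies_ms[i - window:i]
        mu, sigma = mean(recent), stdev(recent)
        if sigma > 0 and abs(latencies_ms[i] - mu) > z * sigma:
            anomalies.append(i)
    return anomalies


normal = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101]
print(detect_anomalies(normal + [250, 100]))                     # [10]: the spike
print(detect_anomalies([x + 300 for x in normal] + [402, 399]))  # []: slow but steady
```

A fixed 300 ms alert would fire on every point of the second series even though nothing changed; the rolling baseline stays quiet there and flags only the genuine spike in the first.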

According to xMatters (an incident management platform), AI-driven systems “quickly identify and resolve issues” by combining real-time anomaly detection with automated root cause analysis. This means when an issue occurs, the AI doesn’t just page an engineer saying “something’s wrong,” but might actually pinpoint where and why it’s wrong. For instance, it might correlate a spike in error logs to a recent deployment or a specific microservice, saving the team precious debugging time.
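Real root-cause analysis weighs many signals, but the deployment correlation mentioned above can be shown with a deliberately naive Python sketch: given the time of an error spike and a list of deployments (both hypothetical inputs), return the most recent deployment inside a lookback window. The 30-minute default is an assumption.

```python
from datetime import datetime, timedelta
from typing import Optional


def suspect_deployment(
    spike_at: datetime,
    deployments: list[tuple[datetime, str]],
    lookback: timedelta = timedelta(minutes=30),
) -> Optional[str]:
    """Return the service of the latest deployment within `lookback` before the spike."""
    candidates = [
        (deployed_at, service)
        for deployed_at, service in deployments
        if spike_at - lookback <= deployed_at <= spike_at
    ]
    return max(candidates)[1] if candidates else None


deploys = [
    (datetime(2024, 6, 7, 17, 0), "search"),
    (datetime(2024, 6, 7, 17, 50), "payments"),
    (datetime(2024, 6, 7, 18, 30), "inventory"),
]
print(suspect_deployment(datetime(2024, 6, 7, 18, 5), deploys))  # payments
```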

Automated incident response: Some AI agents can go a step further and automatically execute remediation steps for known types of incidents. For example, if an AI detects that a web server is hung (no requests are succeeding for 5 minutes) and identifies a memory leak as the likely cause, it could proactively restart that service or spin up new instances, before the on-call engineer even gets to their keyboard. This capability can greatly reduce downtime. A report on AIOps use cases cites “real-time anomaly detection, automated root cause analysis, and predictive insights” as primary uses that “swiftly identify and resolve incidents, minimize downtime, and enhance reliability.” Essentially, AI agents help keep systems running smoothly without constant human supervision.
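A common safe pattern for this kind of automation is a runbook: only incident types with a pre-approved action are handled automatically, and everything else (or anything past a budget of automatic actions) is escalated to a human. The Python sketch below is hypothetical; the `restart` and `scale out` strings are placeholders where real code would call your orchestrator or cloud API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Remediator:
    # Incident type -> pre-approved action; anything not listed goes to a human.
    runbook: dict[str, Callable[[str], str]]
    max_auto_actions: int = 3  # guardrail so automation cannot loop indefinitely
    actions_taken: list[str] = field(default_factory=list)

    def handle(self, incident_type: str, service: str) -> str:
        action = self.runbook.get(incident_type)
        if action is None or len(self.actions_taken) >= self.max_auto_actions:
            return f"page on-call: {incident_type} on {service}"
        self.actions_taken.append(f"{incident_type}:{service}")
        return action(service)


remediator = Remediator(
    runbook={
        # Placeholder actions standing in for real orchestration calls.
        "memory_leak": lambda svc: f"restart {svc}",
        "high_load": lambda svc: f"scale out {svc}",
    },
    max_auto_actions=2,
)
print(remediator.handle("memory_leak", "web"))  # restart web
print(remediator.handle("disk_full", "db"))     # page on-call: disk_full on db
```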

Resource optimization and forecasting: Full-stack apps often scale up and down or need the right allocation of servers/containers to handle load. AI can analyze usage patterns and predict future needs – this is capacity planning on steroids. An AI agent might observe that traffic increases by 30% every Friday evening and could suggest preemptively adding more server capacity on Fridays to avoid slowdowns. It can also identify over-provisioned resources (say a database server that’s always at 5% CPU – maybe it’s oversized and costing money unnecessarily). By forecasting and optimizing, AI helps teams save on infrastructure costs while ensuring performance. xMatters mentions “predict future resource needs and prevent over/under-provisioning” as a benefit of AI in resource management. These AI-driven optimizations lead to improved operational efficiency for full-stack teams by streamlining workflows, reducing manual intervention, and enhancing system availability.
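Both examples in this paragraph, a recurring Friday peak and a database idling at 5% CPU, come down to simple arithmetic once usage data is collected. The Python sketch below is a naive baseline rather than a forecasting model; the input shapes and the 10% utilization floor are assumptions.

```python
from statistics import mean


def day_uplift(daily_requests: list[tuple[str, int]], day: str) -> float:
    """How much busier `day` is than all other days; 0.3 means 30% more traffic."""
    on_day = [count for weekday, count in daily_requests if weekday == day]
    others = [count for weekday, count in daily_requests if weekday != day]
    return mean(on_day) / mean(others) - 1


def over_provisioned(avg_cpu: dict[str, float], floor: float = 0.10) -> list[str]:
    """Hosts whose average CPU utilization stays below `floor` (candidates to downsize)."""
    return sorted(host for host, utilization in avg_cpu.items() if utilization < floor)


two_weeks = [("Mon", 100), ("Tue", 100), ("Wed", 100), ("Thu", 100), ("Fri", 130)] * 2
print(f"Friday uplift: {day_uplift(two_weeks, 'Fri'):.0%}")           # Friday uplift: 30%
print(over_provisioned({"db-1": 0.05, "web-1": 0.62, "web-2": 0.08}))  # ['db-1', 'web-2']
```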

Security and DevSecOps: Monitoring isn’t just for performance – AI agents also keep an eye on security signals. They can detect suspicious patterns like a sudden flood of failed login attempts (possibly a brute force attack) or unusual data access patterns (potential insider threat or data exfiltration). These agents can raise immediate alerts or even block activity if configured to do so. AI’s ability to analyze large volumes of data quickly means it might catch a security incident that would be hard for humans to notice among thousands of normal log entries.
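
A common building block for this kind of detection is a sliding-window counter. The sketch below flags any source IP with more than a set number of failed logins in the last N seconds. The limits are illustrative, and it assumes events for a given IP arrive in time order.

```python
from collections import defaultdict, deque

class FailedLoginMonitor:
    """Flags a source IP that produces too many failed logins within a sliding time window."""

    def __init__(self, max_failures: int = 10, window_seconds: float = 60.0):
        self.max_failures = max_failures
        self.window = window_seconds
        self.failures = defaultdict(deque)  # ip -> timestamps of recent failures

    def record_failure(self, ip: str, timestamp: float) -> bool:
        """Record one failed login; return True if the IP now looks like a brute-force source."""
        recent = self.failures[ip]
        recent.append(timestamp)
        # Forget failures that have fallen out of the window (assumes time-ordered events).
        while timestamp - recent[0] > self.window:
            recent.popleft()
        return len(recent) > self.max_failures

monitor = FailedLoginMonitor(max_failures=3, window_seconds=60)
for t in (0, 10, 20, 30):
    if monitor.record_failure("203.0.113.7", t):
        print("Possible brute-force attempt from 203.0.113.7 at t =", t)
```

An AI-based agent would learn what "normal" looks like per user or region instead of using a fixed limit, but alerts are typically still raised through a windowed check like this one.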

CI/CD pipeline optimization: Some AI agents assist in the build and deployment pipeline. For instance, they can analyze build logs to find flaky tests, or identify which tests are likely to fail given a particular code change, so you can run only those and get faster feedback. AI can also optimize artifact caching or container build steps to speed up continuous integration. These are behind-the-scenes improvements, but they contribute to a faster and more reliable delivery process.
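
Flaky-test detection can start from a simple rule: a test that both passed and failed on the same commit changed its outcome without any code change. This sketch assumes build history is available as (commit, test, passed) records. An AI agent would layer smarter signals on top, but this is the core idea.

```python
from collections import defaultdict

def find_flaky_tests(runs):
    """runs: iterable of (commit_sha, test_name, passed).
    A test is flagged as flaky if it both passed and failed on the same commit,
    i.e. its outcome changed without any code change."""
    outcomes = defaultdict(set)
    for sha, test, passed in runs:
        outcomes[(sha, test)].add(passed)
    return sorted({test for (sha, test), seen in outcomes.items() if seen == {True, False}})

# Hypothetical CI history.
runs = [
    ("a1f3", "test_login", True),
    ("a1f3", "test_login", False),   # same commit, different result: flaky
    ("a1f3", "test_cart", True),
    ("b7c9", "test_cart", False),    # failed after a code change: a regression candidate
    ("b7c9", "test_search", False),
    ("b7c9", "test_search", False),  # consistently failing, not flaky
]
print(find_flaky_tests(runs))
```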

Real outcome: Teams using AIOps have reported significant reductions in alert noise (engineers might see, say, 80% fewer false alarms), faster incident resolution (mean time to resolve drops thanks to better diagnostics), and improved uptime. For example, a team might find that an AI agent automatically resolved 3 minor incidents overnight that previously would have woken an on-call engineer – a huge quality-of-life improvement for the team and better service continuity for users.

Consider a concrete scenario: A full-stack e-commerce application experiences a sudden slowdown. Traditionally, developers would scramble through different dashboards trying to guess the cause. An AIOps agent, however, simultaneously notices an uptick in database query timeouts and an error in the payment service logs, correlates them, and identifies that a specific microservice (the inventory service) is causing a bottleneck due to lock contention. It alerts the team with this specific insight, so they can zero in on the inventory service bug rather than trying to figure out which part of a complex system is at fault. In some cases, the AI might even trigger a mitigation, e.g., routing traffic away from the problematic service or recycling it.
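
The correlation step in this scenario can be approximated with a service dependency graph: among the services showing anomalies, the likely origin is one whose own dependencies all look healthy. The service names and graph below are hypothetical, and real AIOps tools weigh many more signals, but the sketch shows the basic reasoning.

```python
def likely_root_causes(depends_on, anomalous):
    """depends_on: service -> list of services it calls.
    anomalous: services currently showing errors or timeouts.
    A service is a likely root cause if it is anomalous but none of its own
    dependencies are, so the problem probably starts there."""
    return sorted(
        s for s in anomalous
        if not any(dep in anomalous for dep in depends_on.get(s, []))
    )

# Hypothetical topology: each service maps to the services it calls.
depends_on = {
    "web": ["checkout"],
    "checkout": ["payment", "inventory"],
    "payment": ["inventory"],
    "inventory": ["db"],
    "db": [],
}
anomalous = {"web", "checkout", "payment", "inventory"}
print(likely_root_causes(depends_on, anomalous))
```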

In short, AI agents in DevOps act like vigilant guardians of your application’s health. They process far more monitoring data than a human could, react faster, and even take intelligent actions. Full-stack teams benefit by having more stable and efficient deployments, and by spending less time firefighting and more time building new features.

Supporting evidence: The use of AI in operations shows clear benefits: it can “reduce mean time to resolution (MTTR) and enhance system reliability” by proactive monitoring. AI-driven alerting systems “reduce noise and prioritize significant notifications,” preventing alert fatigue. Additionally, AI’s ability to “analyze thousands of metrics in real-time” and trigger responses immediately when abnormal behavior is detected has been noted to strengthen system uptime and customer experience. These references reinforce that AI agents are effectively improving DevOps practices in real organizations.

Use Case 5: AI for Documentation and Knowledge Management

Documentation is the lifeblood of any full-stack project, from API docs and architectural diagrams to code comments and user guides. Yet many developers find writing and maintaining documentation tedious, and documentation often lags behind the code (“documentation drift”). This is where an AI agent can step in as a documentation assistant, helping keep your team’s knowledge base up to date and accessible.

Automated code documentation: AI agents can generate human-readable explanations for code, essentially acting as a technical writer. They draw on contextual data from the codebase and project history to produce accurate, relevant documentation. For example, given a piece of source code, an AI can summarize what the code does, state the purpose of each function, describe its parameters, and so on. Tabnine’s AI documentation blog defines this as using AI “to create, manage, and update documentation for software projects,” where the AI “understands codebases and generates explanations and documentation that accompany the code”. So if you have a large legacy module with little documentation, an AI agent could scan it and produce a draft README or inline comments explaining each part. It’s like a documentarian that reads code fluently and writes about it.
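
Before an AI can describe a legacy module, something has to find what is undocumented and collect the context. This Python sketch uses the standard `ast` module to list functions without docstrings and builds a prompt for each. The prompt wording, and whichever model it would be sent to, are assumptions rather than any specific tool's behavior.

```python
import ast

def undocumented_functions(source: str):
    """Return (signature, source_code) for every function in `source` lacking a docstring."""
    tree = ast.parse(source)
    results = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)) and ast.get_docstring(node) is None:
            params = ", ".join(arg.arg for arg in node.args.args)
            results.append((f"{node.name}({params})", ast.get_source_segment(source, node)))
    return results

def doc_prompt(signature: str, code: str) -> str:
    # Hypothetical prompt template for a documentation agent.
    return (f"Write a concise docstring for {signature}. Explain its purpose, "
            f"each parameter, and the return value.\n\n{code}")

legacy = '''
def process_order(order, user):
    return order

def refund(order_id):
    """Issue a refund for the given order."""
    return order_id
'''
for signature, code in undocumented_functions(legacy):
    print(doc_prompt(signature, code))
```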

Updating docs continuously: One big issue in fast-moving projects is that as code changes, documentation (like design docs or user manuals) often doesn’t get updated right away, leading to inaccuracies. AI agents can mitigate this by monitoring code changes and either suggesting documentation updates or automating them outright. For instance, an AI could detect that you added a new API endpoint and automatically add that endpoint’s details to the API reference docs, including request/response examples, by analyzing the code and tests. By doing so, AI helps prevent documentation drift, where docs fall out of sync with the code. This helps ensure that developers and new team members have correct information to refer to, and that external stakeholders (like users of an API) get accurate docs.
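
Detecting drift can be as simple as comparing the routes your code serves with the ones your OpenAPI document describes. In this sketch, `code_routes` is assumed to come from your web framework's router (how you extract it depends on the framework). The spec is the parsed OpenAPI JSON or YAML, whose `paths` object maps each path to its HTTP operations.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}

def documented_routes(openapi_spec: dict) -> set:
    """Collect (METHOD, path) pairs from an OpenAPI document's `paths` object."""
    return {
        (method.upper(), path)
        for path, path_item in openapi_spec.get("paths", {}).items()
        for method in path_item
        if method in HTTP_METHODS  # skip non-operation keys such as "parameters"
    }

def doc_drift(code_routes: set, openapi_spec: dict) -> dict:
    documented = documented_routes(openapi_spec)
    return {
        "undocumented": sorted(code_routes - documented),  # served by code, missing from docs
        "stale": sorted(documented - code_routes),         # documented, no longer served
    }

# Hypothetical inputs: routes from the app's router and a parsed openapi.json.
code_routes = {("GET", "/orders"), ("POST", "/orders")}
spec = {"paths": {"/orders": {"get": {}, "parameters": []}, "/legacy": {"delete": {}}}}
print(doc_drift(code_routes, spec))
```

An AI agent's added value is in the next step: drafting the missing descriptions and examples for the "undocumented" entries.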

Natural language querying of knowledge: Another knowledge-management boost is using AI (like GPT-based chatbots) as an interface to your team’s collective knowledge. Companies are deploying internal chatbots trained on, or connected to, their documentation, wikis, and even Slack history. Instead of digging through Confluence pages, a developer can ask the AI, “How do I set up the dev environment for Project X?” and get an instant answer drawn from the docs. This is an AI agent acting as a knowledge concierge, improving productivity especially during onboarding or when working across multiple repos.
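
Under the hood, such a knowledge bot usually retrieves the most relevant documents first and then asks a language model to answer from them. The retrieval half is sketched below with plain keyword overlap, a toy stand-in for the embedding search production systems typically use. The documents and the stopword list are made up for illustration.

```python
import re
from collections import Counter

STOPWORDS = {"how", "do", "i", "the", "a", "an", "to", "for", "is", "what", "of", "and"}

def tokenize(text: str):
    return re.findall(r"[a-z0-9]+", text.lower())

def top_docs(question: str, docs: dict, k: int = 2):
    """Rank docs by how often they use the question's non-stopword terms."""
    terms = set(tokenize(question)) - STOPWORDS
    scores = {
        title: sum(count for word, count in Counter(tokenize(body)).items() if word in terms)
        for title, body in docs.items()
    }
    ranked = sorted(scores, key=lambda title: (-scores[title], title))
    return [title for title in ranked[:k] if scores[title] > 0]

# Hypothetical internal docs.
docs = {
    "Dev setup": "To set up the dev environment for Project X, install Docker and run make bootstrap.",
    "Deploy guide": "Deploy to staging with the release pipeline.",
    "Style guide": "Use camelCase for variables.",
}
print(top_docs("How do I set up the dev environment for Project X?", docs))
```

The retrieved text would then be placed in the model's prompt so the answer stays grounded in your own docs.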

Generating user-facing documentation: For full-stack teams, not only internal docs but also user-facing ones (like user manuals, FAQs, release notes) can be drafted by AI. For example, when a new feature is implemented, an AI could generate a draft of the feature announcement or update to the user guide by summarizing the Git commit and issue tracker information. The team can then fine-tune the wording, but the heavy lifting is done.
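
Much of the heavy lifting is gathering and grouping the raw material. Assuming the team writes commit messages in the Conventional Commits style (`feat(scope): description`, `fix: description`), a deterministic first draft can be assembled like this before an AI model polishes the wording. The section names are illustrative.

```python
import re
from collections import defaultdict

# Assumes Conventional Commits-style subjects: "type(scope): description".
COMMIT_RE = re.compile(r"^(?P<type>feat|fix|perf)(?:\((?P<scope>[^)]+)\))?!?: (?P<desc>.+)$")
SECTIONS = [("feat", "New features"), ("fix", "Bug fixes"), ("perf", "Performance")]

def draft_release_notes(messages):
    groups = defaultdict(list)
    for message in messages:
        match = COMMIT_RE.match(message.splitlines()[0])
        if match:  # "chore:", "test:", etc. stay out of user-facing notes
            scope = f"**{match['scope']}**: " if match["scope"] else ""
            groups[match["type"]].append(f"- {scope}{match['desc']}")
    lines = []
    for commit_type, heading in SECTIONS:
        if groups[commit_type]:
            lines += [f"## {heading}", *groups[commit_type], ""]
    return "\n".join(lines).strip()

commits = [
    "feat(search): add fuzzy matching",
    "fix: correct tax rounding on invoices",
    "chore: bump dependencies",
    "feat: dark mode toggle",
]
print(draft_release_notes(commits))
```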

Consistency and style enforcement: Documentation written by dozens of developers can be inconsistent. AI documentation tools can enforce a consistent tone and format. They can be trained on your preferred style guide. As Tabnine’s article notes, AI can “enforce consistent terminology and style across all documentation automatically”. This results in cleaner, uniform documents which are easier to read.
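
Terminology enforcement, at its simplest, is a glossary lookup. The sketch below flags discouraged terms and suggests the preferred ones. The glossary entries are hypothetical examples, and an AI-based tool would go further by rephrasing whole sentences in the house style.

```python
import re

# Hypothetical team glossary: discouraged term -> preferred term.
GLOSSARY = {"log-in": "sign in", "e-mail": "email", "whitelist": "allowlist"}

def terminology_issues(text: str):
    """Return (position, found_term, preferred_term) for each discouraged term, in text order."""
    issues = []
    for discouraged, preferred in GLOSSARY.items():
        pattern = rf"\b{re.escape(discouraged)}\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            issues.append((match.start(), match.group(0), preferred))
    return sorted(issues)

sample = "Add the IP to the whitelist, then log-in and check your E-mail."
for pos, found, preferred in terminology_issues(sample):
    print(f"char {pos}: replace '{found}' with '{preferred}'")
```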

Practical example: Suppose your full-stack application offers a REST API for third-party developers. Keeping the API docs in sync with code changes is painful. An AI agent plugged into your CI pipeline could detect changes to API controllers and automatically update an OpenAPI/Swagger specification or markdown docs. It writes out the new endpoints, what they do (from code comments or analysis), and sample requests. It might also flag if any example responses need updating due to code changes. Additionally, for internal use, if a developer is unsure how a complex function works, they could ask an AI documentation agent, “Explain function processOrder() in simple terms,” and the agent would generate a summary.

Another scenario: a new engineer joins the team and needs to understand the architecture. Instead of reading a 50-page outdated doc, they query an AI agent trained on the current architecture diagrams and code to ask, “How does the authentication flow work?” The agent returns a concise explanation with references to relevant modules, saving hours of digging.

Knowledge base maintenance: AI agents can also help curate FAQs or troubleshooting runbooks by analyzing past support tickets or forum Q&A. If they notice a question recurring (say, “How do I reset my password?” appears often), they can suggest adding it to the documentation so the help docs address it prominently.

Impact:

- Efficiency: Developers spend less time writing docs and more time coding, without sacrificing documentation quality. Documentation, often a neglected backlog item, can keep pace with the code instead of trailing it.

- Onboarding speed: New team members or even new users can get up to speed faster with AI-curated documentation and Q&A agents.

- Reduced errors: Up-to-date documentation means fewer mistakes due to following outdated instructions or interface specs. Trustworthiness of docs increases.

- Better user experience: If your team provides APIs or SDKs, having thorough and current documentation (thanks to AI) improves adoption and satisfaction for those users.

Of course, human review of AI-written docs is wise, to ensure accuracy and clarity, but it’s much easier to edit an AI draft than to write from scratch. And agents that learn from corrections tend to produce better output over time.

Supporting evidence: AI documentation tools are already available and highlight major benefits: “automate the documentation process, reducing the time and effort required”, and “significantly reduce the occurrence of documentation drift” by updating docs as code evolves. This shows that in real-world usage, AI keeps documentation aligned with software changes. Additionally, by maintaining consistency and providing natural language explanations, AI agents make documentation more accessible and maintainable. These improvements build a strong case for deploying AI agents in the documentation workflow of full-stack teams.

Use Case 6: AI in Project Management and Team Coordination

Full-stack development isn’t just about code – it’s also about effective project management: planning sprints, tracking progress, and keeping everyone on the same page. AI agents have begun to assist here as well, acting as virtual project managers or team assistants that streamline coordination and communication.

Scheduling and meeting facilitation: One mundane task in teams is scheduling meetings or stand-ups that work for everyone, especially across time zones. AI scheduling assistants (like x.ai or AI-enabled calendar plugins) can take on that burden by finding optimal meeting times. Beyond scheduling, imagine an AI agent that participates in meetings: it could transcribe discussions, highlight action items, and even send follow-up emails with summaries. Some teams use AI transcription and analysis tools during design discussions or retrospectives, where the AI produces meeting minutes and extracts the decisions made and tasks to be done.

Task management and prioritization: AI can help project managers by intelligently prioritizing the backlog. For instance, it might analyze user feedback or past sprint velocity to suggest which features or bug fixes should be tackled first for maximum impact. An AI agent integrated with tools like Jira or Trello could automatically update task statuses based on commit messages (e.g., mark a ticket as in progress or resolved when it detects a linked commit). More ambitiously, it could predict whether the team is at risk of missing a deadline by comparing current progress with historical trends, prompting early warnings.
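
Syncing ticket status from commits mostly comes down to parsing messages. This sketch assumes a hypothetical convention where any `PROJ-123`-style key marks a ticket as in progress and phrases like "fixes PROJ-123" mark it resolved. Actually pushing the result to Jira or Trello would go through that tool's API, which is not shown.

```python
import re

# Hypothetical convention: any "PROJ-123"-style key marks a ticket as in progress;
# "fixes/closes/resolves PROJ-123" marks it resolved.
TICKET = r"[A-Z][A-Z0-9]+-\d+"
CLOSING = re.compile(rf"\b(?:fix(?:es|ed)?|close[sd]?|resolve[sd]?)\s+({TICKET})", re.IGNORECASE)
MENTION = re.compile(rf"\b({TICKET})\b")

def status_updates(commit_messages):
    """Map each ticket key to the status suggested by the commit messages, oldest first."""
    updates = {}
    for message in commit_messages:
        closed = set(CLOSING.findall(message))
        for key in MENTION.findall(message):
            if key in closed:
                updates[key] = "Resolved"
            else:
                updates.setdefault(key, "In Progress")  # never downgrade a resolved ticket
    return updates

commits = [
    "PROJ-12: start checkout refactor",
    "Fixes PROJ-12 by locking inventory rows",
    "WEB-7 wip on navbar",
]
print(status_updates(commits))
```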

One example from the Voiceflow blog on AI agents describes “Virtual project managers [that] use AI to facilitate meetings, manage tasks and track deadlines. They automate scheduling, send reminders, and provide updates on project progress, ensuring all team members are aligned.” This sums it up well: the AI agent can keep everyone informed, for instance by automatically posting a daily summary in Slack of what was completed yesterday and what’s on tap today (by scanning commit logs, merge requests, and calendar events).

Cross-team communication: In larger organizations, AI agents can route queries to the right people. If a developer has a question that the AI can’t answer from documentation, an agent could determine the best person in the company to answer and forward the query, saving time in hunting down the right expert.

Risk management: AI agents can analyze project data (like requirement complexity and historical bug rates) to predict risks, e.g., “Feature X is complex and similar past features slipped by 2 weeks; consider adjusting the timeline or adding another developer.” This kind of insight helps project managers make data-driven decisions.

Burnout detection and workload balancing: On a more human note, some companies are exploring AI tools to gauge team health. By looking at factors like who frequently commits outside working hours or who has carried a heavy task load for multiple sprints, the AI might flag potential burnout to the manager so they can redistribute work or give someone a break.
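
As a rough illustration of the kind of signal involved, the sketch below computes each author's share of commits made outside working hours or on weekends and flags anyone above a threshold. The hours and the 40% cut-off are arbitrary assumptions, and a flag like this is a prompt for a conversation, not a verdict on anyone's workload.

```python
from collections import Counter
from datetime import datetime

def off_hours_share(commits, start_hour=9, end_hour=18):
    """commits: (author, datetime) pairs. Returns each author's fraction of commits
    made on weekends or outside start_hour..end_hour on weekdays."""
    total, off_hours = Counter(), Counter()
    for author, ts in commits:
        total[author] += 1
        if ts.weekday() >= 5 or not (start_hour <= ts.hour < end_hour):
            off_hours[author] += 1
    return {author: off_hours[author] / total[author] for author in total}

def workload_flags(commits, threshold=0.4):
    """Authors whose off-hours share exceeds `threshold`."""
    return sorted(a for a, share in off_hours_share(commits).items() if share > threshold)

commits = [
    ("alice", datetime(2024, 5, 6, 23, 0)),   # Monday, late night
    ("alice", datetime(2024, 5, 11, 10, 0)),  # Saturday
    ("alice", datetime(2024, 5, 7, 11, 0)),   # Tuesday, working hours
    ("bob", datetime(2024, 5, 7, 14, 0)),
    ("bob", datetime(2024, 5, 8, 10, 0)),
]
print(workload_flags(commits))
```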

ChatOps and assistant bots: Many teams use chat platforms (Slack, Teams) heavily. AI agents can live in these as chatbots. For example, you could ask in a chat: “@AI-Bot, what’s the status of the frontend deployment?” and it could reply after checking CI/CD pipelines. Or “@AI-Bot, list open pull requests that need review” and it gathers that information. This saves time switching contexts and searching through different tools.

Team training and support: If a team member is struggling with a certain tech or tool, an AI agent might notice (e.g., via many errors or questions) and proactively suggest training materials or a mentor who’s an expert. It’s a bit futuristic, but not far-fetched with the data accessible to AI.

Real-world example: Companies have started integrating AI copilots into tools like Microsoft Teams, where during a meeting, the AI will create a to-do list from what was discussed. Or consider daily stand-ups: an AI could collect each engineer’s updates (perhaps from a quick form or even by parsing yesterday’s Git commits and today’s calendar) and present a consolidated stand-up report, even highlighting blockers (e.g., “Alice’s task is waiting on Bob’s database migration to be finished”). This agent might also send a polite nudge to Bob, reminding him of Alice’s dependency.
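
The blocker detection in this example boils down to a dependency check over tasks. A minimal sketch, assuming a hypothetical task map that records an owner, a done flag, and dependencies for each task. The same pairs tell the agent whom to nudge.

```python
def find_blockers(tasks):
    """tasks: name -> {"owner": str, "done": bool, "depends_on": [task names]}.
    Returns (blocked_owner, blocking_owner, message) for unfinished work waiting on unfinished work."""
    blockers = []
    for name, task in tasks.items():
        if task["done"]:
            continue
        for dep in task.get("depends_on", []):
            if not tasks[dep]["done"]:
                message = (f"{task['owner']}'s task '{name}' is waiting on "
                           f"{tasks[dep]['owner']}'s '{dep}'")
                blockers.append((task["owner"], tasks[dep]["owner"], message))
    return blockers

# Hypothetical sprint board.
tasks = {
    "checkout-ui": {"owner": "Alice", "done": False, "depends_on": ["orders-migration"]},
    "orders-migration": {"owner": "Bob", "done": False, "depends_on": []},
    "api-docs": {"owner": "Carol", "done": False, "depends_on": ["api-spec"]},
    "api-spec": {"owner": "Dan", "done": True},
}
for blocked, blocking, message in find_blockers(tasks):
    print(message)  # line for the stand-up report
    print(f"Nudge {blocking}: {blocked} is waiting on you.")
```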

Voiceflow’s example of a virtual PM agent underscores improvements in collaboration and efficiency: by automating reminders and progress updates, “all team members are aligned and informed”, and by analyzing performance data to identify bottlenecks, the agent helps optimize workflows. Essentially, it reduces the managerial overhead and ensures nothing falls through the cracks.

For a full-stack team, which often juggles multiple priorities (front-end feature, back-end refactor, urgent bug, etc.), having an AI co-manager means:

- Fewer missed deadlines (because the AI warned you in advance).

- Fewer status meetings (because status is automatically gathered and shared).

- More focus on actual development rather than administrative tasks.

- No more “oops I forgot to update that Jira ticket” – the AI can do it for you as work progresses.

Supporting evidence: The concept of an AI project manager is already being applied. One source describes how these agents “moderate meetings, manage tasks and deadlines… automate scheduling, send reminders, and provide progress updates”. They even “generate reports and summaries of meetings, allowing teams to focus on strategic discussions rather than administrative tasks.” The result is improved collaboration and more efficient project execution. These real-world functions show that AI can handle much of the coordination workload, which is a boon for full-stack teams aiming to stay agile and in sync.

Use Case 7: AI-Driven User Experience and Feedback Analysis

For a full-stack team, understanding the user experience (UX) and responding to user feedback is paramount. AI agents can aid in both analyzing feedback at scale and even contributing to the design process, ensuring that the end product delights users.

Feedback analysis at scale: Modern applications receive feedback from many channels: app store reviews, support tickets, surveys, social media, and more. Manually sifting through thousands of comments to extract common pain points or feature requests is daunting. AI agents excel at natural language processing, and companies use them to run sentiment analysis and topic clustering on user feedback. For instance, an AI can read all customer support tickets from the last month and report that the top three sources of user frustration are a slow checkout process, a hard-to-use profile settings page, and the lack of a dark mode. It might even quantify sentiment (e.g., “overall user satisfaction dropped 5% after the last release”) and point to the changes that likely caused it.

Zendesk (a customer support platform) notes that AI-based feedback analysis “consistently [identifies] trending customer needs, pain points, and preferences,” flagging negative sentiment in real time. This helps businesses be proactive – for example, if the AI agent alerts that a new feature is getting a lot of negative feedback in its first 24 hours, the team can react quickly, perhaps rolling back or patching the issue, rather than finding out weeks later via anecdata. AI can also parse feedback and map it to specific features or components, giving precise direction to full-stack teams on where to focus improvements.
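
The aggregation behind statements like "top sources of frustration" can be sketched with keyword topics and a tiny sentiment lexicon. Both are hypothetical placeholders here. Real feedback-analysis tools use trained language models for classification and sentiment, but the counting step looks much the same.

```python
import re
from collections import Counter

# Hypothetical topic keywords and sentiment lexicon; real tools use trained models here.
TOPICS = {
    "checkout": {"checkout", "payment", "cart"},
    "profile settings": {"profile", "settings", "account"},
    "dark mode": {"dark", "theme"},
}
NEGATIVE = {"slow", "confusing", "broken", "hate", "crash"}

def analyze(feedback):
    """Return (topic, mentions, share_negative) per topic, most-mentioned first."""
    mentions, negative = Counter(), Counter()
    for text in feedback:
        words = set(re.findall(r"[a-z]+", text.lower()))
        is_negative = bool(words & NEGATIVE)
        for topic, keywords in TOPICS.items():
            if words & keywords:
                mentions[topic] += 1
                negative[topic] += int(is_negative)
    return [(topic, n, negative[topic] / n) for topic, n in mentions.most_common()]

feedback = [
    "Checkout is so slow",
    "Payment page is broken",
    "Where is dark mode?",
    "Love the new cart",
    "Profile settings are confusing",
]
for topic, n, share in analyze(feedback):
    print(f"{topic}: {n} mentions, {share:.0%} negative")
```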

Voice of Customer and prioritization: By aggregating what users say, AI agents help prioritize the roadmap. Suppose the AI finds that 40% of user comments mention “search function not working well.” That’s a clear signal to prioritize enhancing the search feature. Without AI, you might not catch that trend until much later or with a gut feeling. The AI provides data-driven justification for decisions. It also democratizes feedback – not just the loudest voices or the one big client, but a broad view of all users’ voices.

Personalization and UX optimization: AI agents can also work inside the application itself, analyzing user behavior to tailor the experience and optimize engagement. For example, an agent can adapt the interface or content to how a user behaves on your platform (recommending relevant items, or simplifying the UI for a novice user). While this is more of a product feature, the full-stack team would implement and maintain such an AI. Think of streaming services that use AI to personalize recommendations – that’s an AI agent optimizing UX on the fly.

AI-assisted design and prototyping: The creative process of UI/UX design can also get a boost from AI. There are AI design tools that generate UI layouts from prompts or hand-drawn sketches (such as Uizard, Galileo AI, etc.). A designer or developer can say “AI, create a dashboard interface with a navigation bar, stats overview, and recent activity list” and get a prototype in seconds. While it may not be final, it provides a jumping-off point. This can speed up iterations – the team can quickly get user feedback on an AI-generated prototype before refining it. For example, Uizard is marketed as “the world’s first AI-powered UI design tool” and can generate entire sets of screen designs from a prompt. Such tools also often come with AI chatbots to refine designs (“make that button bigger”, “change the color scheme to dark”), which feels like having an AI design assistant.

Usability testing with AI: Another emerging use is using AI to simulate user interactions for usability testing. For instance, heatmap prediction tools use AI to predict where a user’s attention will go on a page (based on visual elements), helping designers optimize layout before real user testing.

Real-world impact: A company leveraged AI feedback analysis to overhaul a major feature after the AI revealed most users found it confusing. By quantifying the extent of the issue (70% of feedback about that feature was negative), they justified dedicating an entire sprint to redesign it. Post-fix, the AI measured improved sentiment scores, validating the effort. This closed-loop of feedback → AI analysis → action → AI re-evaluation leads to continuous UX improvements grounded in data.

Likewise, a startup used AI prototyping to generate multiple design ideas for their mobile app in one afternoon, something that would have taken their single UX designer weeks to sketch. They then quickly tested those prototypes with a small user group to choose a direction. The AI accelerated the creative process significantly.

User support and chatbots: Let’s not forget an AI agent that directly interacts with users – chatbots. Many full-stack teams integrate AI-driven chatbots on their websites or apps to handle common support queries. This reduces workload on support teams and provides instant help to users. While not a development task per se, building and refining such a chatbot becomes part of the full-stack team’s responsibilities. Modern AI chatbots (powered by GPT-like models) can handle quite complex questions, improving user experience by providing on-the-spot assistance or tutorials.

In summary, AI for UX and feedback ensures that the product is aligned with user needs and allows teams to iterate quickly. It’s like having a real-time pulse on user satisfaction and an assistant that helps craft better experiences. In the competitive world of software, this can be a distinguishing factor – the teams that respond fastest to user feedback and fine-tune their UX will win user loyalty.

Supporting evidence: AI-driven customer feedback analysis “helps businesses gather feedback, understand it, and act on it faster,” enabling a better customer experience. By identifying trends and sentiments in real-time, AI allows teams to be one step ahead in meeting user needs. On the design side, tools like Uizard demonstrate that AI can generate and even iterate on UI designs, showing how automation is entering the UX design domain. These sources highlight that from analysis to creation, AI agents are deeply influencing how teams approach user-centric development.

Challenges and Considerations when Using AI Agents

While AI agents bring many benefits, it’s important to approach them with a clear understanding of their limitations and risks. Adopting AI in a full-stack team isn’t flip-a-switch magic; it requires managing certain challenges to truly reap the rewards. Here are key considerations:

- Accuracy and “AI Hallucinations”: AI models, especially generative ones, can sometimes produce outputs that look convincing but are actually incorrect or nonsensical – a phenomenon often called hallucination. For example, an AI code assistant might generate code that seems plausible but doesn’t actually compile or work logically. Microsoft engineers observed AI coding tools producing “code that doesn’t compile, or algorithms that contradict themselves,” even making up nonexistent functions at times. These “plausible but incorrect” suggestions mean that blindly trusting AI output is dangerous. Human verification is essential. Teams must double-check AI contributions – whether it’s code, documentation, or analysis. Think of AI as a junior developer: fast and often right, but needing oversight.

- Bias and Data Quality: AI agents learn from data, and if that data contains biases or errors, the AI can perpetuate or even amplify them. For instance, if an AI was trained on code that often skipped accessibility considerations, it might not suggest accessible design solutions. Or an AI analyzing user feedback might misinterpret sentiment if the training data is skewed. It’s crucial to be aware of how the AI was trained and to monitor outputs for biases (like favoring one style or cultural context over others). Using AI responsibly may involve curating training data or fine-tuning models on more representative datasets.

- Security and Privacy: Integrating AI can introduce security considerations. A major one is data privacy – many AI coding assistants or services operate in the cloud, so sending your code to them could be a concern if the code is proprietary. There’s also the risk of AI inadvertently exposing sensitive information (for example, including a password from a config file in a generated snippet or documentation). Solutions like on-premises AI agents or self-hosted models can mitigate this; for example, Diffblue’s tool explicitly runs on-premise so your code never leaves your environment. Teams should ensure that using AI doesn’t violate any data handling policies and that sensitive data is protected (perhaps by anonymizing data before AI analysis, especially in user feedback or logs).

- Integration Effort: Implementing AI agents can require setup and integration work. An AI agent isn’t always plug-and-play; it might need customization to fit your project’s context, or APIs to hook into your systems. There could be a learning curve for the team to use the AI’s capabilities effectively (e.g., learning how to write good prompts for best results). Also, maintaining the AI tools (keeping models updated, tuning parameters) can become a new part of your DevOps work.

- False Sense of Security: There’s a psychological aspect – when AI agents become part of the workflow, developers might become a bit over-reliant or complacent, assuming “the AI checked it, so it must be fine.” This can be risky. For example, an AI code review might miss a certain category of bug, so developers should not forgo their own careful review. While AI agents can operate with minimal human intervention, human oversight remains essential to ensure quality and safety. Striking the right balance is important: trust the AI to assist, but verify the critical things yourself.

- Performance and Cost: Some AI tasks can be resource-intensive. Running large models, especially on-prem, may require beefy hardware (GPUs) or incur cloud costs. If you have an AI agent running continuously (like an AIOps agent processing streams of data), account for the computational cost and any latency it might introduce. Sometimes there’s a trade-off: do you let the AI analyze every single build, which might slow each one by 30 seconds, or do you run it nightly? Those decisions depend on team priorities.

- Ethical and Compliance Issues: Particularly for generative code tools, there have been questions about licensing (e.g., if the AI was trained on GPL code, is its output an issue?). Teams should stay updated on legal guidance around AI-generated content in their domain. Also, for user-facing AI features (like chatbots), one must ensure they don’t output inappropriate or harmful content, which would harm user trust.

- Changing Team Dynamics: Introducing AI agents might change workflows and roles. Some manual work shrinks (good), but team members might need to upskill to work effectively with AI. For instance, QA engineers might transition toward overseeing AI test generation and focusing on higher-level testing. It’s important to communicate clearly within the team about the role of AI – that it’s there to augment, not to judge or replace. This helps in getting team buy-in and alleviating any fears.
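
On the privacy point above (masking sensitive information before it reaches an AI service), the preprocessing step can be small. The patterns below are illustrative only: key/value secrets, email addresses, and IPv4 addresses. They would miss plenty of real-world secrets, and dedicated secret scanners ship far larger rule sets.

```python
import re

# Illustrative patterns only: key/value secrets, email addresses, IPv4 addresses.
REDACTIONS = [
    (re.compile(r"(?i)\b(password|passwd|secret|api[_-]?key|token)\b(\s*[:=]\s*)\S+"), r"\1\2[REDACTED]"),
    (re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"), "[EMAIL]"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "[IP]"),
]

def redact(text: str) -> str:
    """Mask secrets and personal data before text is sent to an external AI service."""
    for pattern, replacement in REDACTIONS:
        text = pattern.sub(replacement, text)
    return text

log_line = "login failed: password = hunter2 for admin@example.com from 10.1.2.3"
print(redact(log_line))
```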

Mitigation strategies: To handle these challenges, teams can establish guidelines such as:

- Always do a code review for AI-written code (perhaps label AI-originated pull requests clearly).

- Use AI suggestions as a starting point, not an absolute answer; encourage a mindset of “critically examine AI output”.

- Maintain a feedback loop: if the AI makes a mistake or an odd suggestion, feed that back if possible (some systems allow giving a thumbs-down or corrective feedback to improve future results).

- For data privacy, either opt for local AI solutions or use services that provide strong privacy guarantees. Mask sensitive info in data fed to AI.

- Keep humans in the loop for final decisions, especially in areas like architectural design, where AI can propose but human experience should validate.

In practice, many teams find that with these precautions, the benefits far outweigh the downsides. It’s like adopting any new powerful tool – understanding its failure modes is key. Just as early software had bugs and needed careful use, AI agents require us to adapt and use them wisely. The good news is that awareness of these challenges is growing, and tools are improving (for example, some AI coding tools now cite sources for their code suggestions to increase trust, or have settings to avoid using certain licenses).

Supporting evidence: Experts have highlighted that AI-generated code can be “plausible but non-functional,” and less experienced devs might be misled by the AI’s confident output. Hallucinated code can introduce inefficiencies or security issues if unchecked. This reinforces that review and testing of AI outputs is non-negotiable. On the privacy front, solutions like Diffblue emphasize keeping code local to avoid IP risks, showing that vendors are aware of and addressing these considerations. By being mindful of such challenges, teams can responsibly integrate AI agents and maintain trustworthiness in their development process.

Best Practices for Integrating AI Agents in Your Team

To successfully leverage AI agents in a full-stack team, it’s crucial to follow some best practices. These will help maximize benefits while minimizing hiccups and risks during integration:

- Start Small and Target High-Impact Areas: Rather than rolling out AI everywhere on day one, identify one or two areas where an AI agent could immediately help. For example, you might start with an AI code assistant in your IDE or an AI test generation tool for one module. Pilot it, gather feedback from the team, and measure improvements. Starting small allows you to fine-tune the integration process. Choose a use case that’s pain-point heavy (lots of repetitive work or frequent errors) – success there will build momentum for broader adoption.

- Train and Educate the Team: Ensure team members understand how to use the AI tools effectively. This might involve a short training session or sharing guidelines. For instance, teach developers how to write effective prompts for the coding assistant (“explain to the AI what you intend, e.g. ‘function to calculate monthly payment given principal, rate, years’ to get a better suggestion”). Likewise, educate on verifying outputs – e.g., if Copilot suggests code, review it as if it came from a colleague. Encourage a culture of collaboration with AI: treat the AI as a helpful assistant whose work you appreciate but verify. This mindset will help everyone get comfortable.

- Customize AI Agents to Your Context: Many AI tools allow customization or configuration. Use this to your advantage. For instance, integrate your code style guide into the AI code review tool so it comments according to your rules. Or feed your project’s glossary of terms into a documentation AI so it uses the correct naming consistently. If you have the resources, fine-tune language models on your own codebase or domain-specific data – this can significantly improve relevance. The more the AI understands your project’s context (frameworks used, coding conventions, business domain), the more accurate and useful its outputs will be.

- Establish Quality Checks and Feedback Loops: Implement checkpoints so AI outputs are vetted. For example, you might require that all AI-generated code goes through the normal PR process with at least one human reviewer (which is standard anyway). For AI-generated documentation, maybe designate a technical writer or dev to quickly sanity-check it before publishing. If the AI agent is giving false positives or useless suggestions, gather those cases and provide feedback to the vendor or adjust its configuration. Many tools improve over time if you give them thumbs-up/down on suggestions, so don’t ignore that feature – use it to make the AI better align with your needs.

- Define Clear Roles for AI vs Humans: Clarity helps adoption. Communicate to the team: “We’re using the AI to handle X, Y, Z tasks, while developers should focus on A, B, C.” For example, “The AI will draft the initial unit tests, and developers will refine them” or “The AI bot will triage low-priority support questions, and anything it can’t answer goes to the human team.” This removes ambiguity and anxiety. It also helps with planning: if the AI drafts 80% of the documentation, you might budget fewer hours for writing it and reallocate those hours to reviewing the AI’s drafts and doing deeper design work.

- Monitor Impact and Metrics: Track how AI integration affects your workflow using concrete metrics. If you use an AI coding assistant, watch code review comments (“Are we seeing fewer trivial issues?”) and survey developers on how much time they spend on specific tasks before and after adoption. If you use AIOps, compare MTTR (Mean Time to Recovery) for incidents before and after its introduction. These metrics help justify the AI investment and surface negative trends (e.g., if the bug count rises after adopting AI, you may need to adjust processes). For reference, GitHub’s controlled study found that developers using Copilot completed a coding task 55% faster than those without it; you can run smaller internal tests to see whether you observe similar gains.

- Encourage Experimentation but Set Boundaries: AI in development is still a rapidly evolving area. Encourage enthusiastic team members to experiment with new features of the AI tools, or even with new AI tools, in a sandbox or other safe environment; they might find better ways to leverage AI. However, set boundaries to maintain quality and security. For instance, allow experimenting with AI to generate code, but insist that no code goes into production without review and testing. Or allow trying an AI service, but ensure proprietary data is anonymized or not shared without approval.

- Keep Security and License Compliance in Mind: As a best practice, configure AI tools to avoid suggesting known vulnerable code or code under incompatible licenses. Some code assistants offer a stricter mode that blocks suggestions reproducing large verbatim chunks of their training code, which reduces license risk. Continue to run your security scans and license checks on the codebase; AI-generated code is not exempt from them.

- Document AI Usage and Decisions: Meta-documentation can help. Keep a page in your wiki about “How we use AI in our team” noting which tools are used, for what, and any gotchas. Also, if the AI provides a solution that the team adopts, it can be worth noting in comments or documentation that it was AI-suggested (if not obvious). This historical note could be useful if you later need to understand the origin of a piece of code or content.
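The MTTR comparison suggested under “Monitor Impact and Metrics” is simple arithmetic, so it is easy to automate. Below is a minimal Python sketch that computes MTTR for incidents before and after an AIOps rollout. The `Incident` record and the sample timestamps are hypothetical; in practice you would pull detection and resolution times from your incident-management tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    """Hypothetical incident record: when it was detected and when service was restored."""
    detected_at: datetime
    resolved_at: datetime


def mean_time_to_recovery(incidents: list[Incident]) -> timedelta:
    """MTTR = total recovery time / number of incidents."""
    if not incidents:
        raise ValueError("need at least one incident to compute MTTR")
    total = sum((i.resolved_at - i.detected_at for i in incidents), timedelta())
    return total / len(incidents)


def percent_change(before: timedelta, after: timedelta) -> float:
    """Relative change in MTTR; a negative value means recovery got faster."""
    return (after - before) / before * 100


# Illustrative sample data only, not real measurements.
t0 = datetime(2024, 1, 1, 9, 0)
before = [
    Incident(t0, t0 + timedelta(minutes=90)),
    Incident(t0, t0 + timedelta(minutes=30)),
]
after = [
    Incident(t0, t0 + timedelta(minutes=45)),
    Incident(t0, t0 + timedelta(minutes=15)),
]

mttr_before = mean_time_to_recovery(before)
mttr_after = mean_time_to_recovery(after)
print(mttr_before, mttr_after, f"{percent_change(mttr_before, mttr_after):.1f}%")
```

With only a handful of incidents the average is noisy, so treat a single before/after comparison as a signal to investigate rather than proof of impact.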

By following these best practices, teams often find that integrating AI agents goes more smoothly and yields more consistent benefits. The idea is to augment your software development lifecycle step by step, not disrupt it chaotically. When done thoughtfully, AI agents start to feel like an integral part of the team’s toolkit, just like a version control system or a testing framework, rather than some alien technology. Teams that master this integration gain a competitive advantage in speed, quality, and adaptability.

Supporting evidence: These best practices are compiled largely from industry experience, but parts of them are echoed in published sources. Experts advise users to “critically examine results” and to treat AI suggestions with a discerning eye. Data also shows greater benefit when users engage with the AI (e.g., giving it context or checking its work) than when they use it blindly. The high success rates reported in GitHub’s study (78% task completion with AI vs. 70% without) came from developers using the AI as a partner, not an autopilot. That underscores the practice of keeping humans in the loop and using AI as an assistant for maximum effect. By applying structured approaches and continuously learning as a team, you keep human experience, expertise, and accountability at the center of your development process, even as AI plays a bigger role.

Agent Solutions: Types and Architectures of AI Agents

AI agents are rapidly transforming the landscape of modern business operations by automating time-consuming tasks, driving data analysis, and delivering intelligent solutions that boost both customer satisfaction and operational efficiency. The diversity of agent solutions available today means organizations can deploy specialized AI agents tailored to their unique needs—whether it’s handling customer queries, monitoring network security, or optimizing supply chains.

**Types of AI Agents:** There are several key types of AI agents, each designed to tackle specific challenges:

- Customer Agents: These AI-powered agents interact directly with customers, automating responses to customer queries, providing context-aware answers, and enhancing the overall customer experience. By leveraging natural language processing and large language models, customer agents can resolve issues quickly and accurately, often with minimal human input.

- Employee Agents: Focused on supporting internal teams, employee agents automate repetitive or time-consuming tasks, such as scheduling, onboarding, or internal support, freeing human teams to focus on higher-value work.

- Code Agents: These agents assist developers by automating code generation, bug detection, and documentation, streamlining the software development lifecycle.

- Data Agents: Specialized in data analysis, these agents sift through vast amounts of data to detect unusual patterns, generate insights, and support decision making.

- Security Agents: Tasked with network security and compliance monitoring, security agents use machine learning to detect fraud, monitor for compliance breaches, and respond to threats in real time.

**Why AI Agents Matter:** AI agents matter because they can execute complex tasks that would otherwise require significant human effort. By automating routine and analytically intensive processes, they can reduce operational costs and free human teams to focus on strategic initiatives. Well-deployed agents can improve customer satisfaction, shorten response times, and increase operational efficiency, all key advantages in today’s competitive landscape.

**Architectures and Deployment:** Deploying AI agents can be achieved through various architectures, with multi-agent systems being particularly powerful. In a multi-agent system, multiple specialized agents interact with each other and with external systems, collaborating to solve complex problems. For example, in a supply chain scenario, one agent might handle inventory tracking while another predicts demand and a third optimizes logistics; together, they deliver a seamless, AI-powered solution.
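To make the supply-chain example concrete, here is a minimal, framework-free Python sketch of three specialized agents coordinated by a simple orchestrator (a hub-and-spoke pattern; agents can also message each other directly in other designs). The class names, the naive moving-average forecast, and the reorder rule are all hypothetical stand-ins; real agents would wrap ML models or LLM calls and talk to external systems.

```python
from dataclasses import dataclass


@dataclass
class InventoryAgent:
    """Hypothetical agent that tracks on-hand stock per SKU."""
    stock: dict[str, int]

    def on_hand(self, sku: str) -> int:
        return self.stock.get(sku, 0)


@dataclass
class DemandAgent:
    """Hypothetical agent that forecasts next-period demand from recent sales."""
    sales_history: dict[str, list[int]]
    window: int = 3

    def forecast(self, sku: str) -> int:
        recent = self.sales_history.get(sku, [])[-self.window:]
        # A naive moving average stands in for a real forecasting model.
        return round(sum(recent) / len(recent)) if recent else 0


class LogisticsAgent:
    """Hypothetical agent that turns a stock shortfall into a shipment order."""

    def plan_shipment(self, sku: str, shortfall: int) -> dict:
        return {"sku": sku, "quantity": shortfall}


def orchestrate(
    skus: list[str],
    inventory: InventoryAgent,
    demand: DemandAgent,
    logistics: LogisticsAgent,
) -> list[dict]:
    """Each agent does one job; the orchestrator passes results between them."""
    orders = []
    for sku in skus:
        shortfall = demand.forecast(sku) - inventory.on_hand(sku)
        if shortfall > 0:
            orders.append(logistics.plan_shipment(sku, shortfall))
    return orders


inventory = InventoryAgent(stock={"widget": 20, "gadget": 50})
demand = DemandAgent(sales_history={"widget": [30, 40, 35], "gadget": [10, 12, 11]})
orders = orchestrate(["widget", "gadget"], inventory, demand, LogisticsAgent())
print(orders)
```

Keeping each agent behind a narrow method (`on_hand`, `forecast`, `plan_shipment`) means any one of them can be swapped for a smarter implementation without touching the others.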

**Industry Applications:** AI-powered agent solutions are making a significant impact across industries:

- Financial Institutions: AI agents automate compliance monitoring, detect fraud, and analyze transaction data, reducing risk and operational costs.

- Healthcare: Intelligent agents assist medical professionals by analyzing patient data (symptoms, medical histories, and lab results), flagging anomalies, and supporting diagnostic decisions.

- E-commerce and Supply Chains: AI agents analyze customer behavior, predict demand, and optimize inventory tracking. According to the World Economic Forum, deploying AI agents in supply chains can increase average order value by 15% and reduce operational costs by 10%.

**Specialized and Autonomous Agents:** Some agent solutions go beyond basic automation. Intelligent agents and autonomous agents, powered by advanced AI models and generative AI, can execute complex tasks such as fraud detection, compliance monitoring, and even real-time decision making. These specialized agents leverage machine learning and natural language processing to analyze historical data, detect unusual patterns, and provide actionable insights with minimal human intervention.
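To give a feel for “analyze historical data and detect unusual patterns”, the sketch below flags new transaction amounts that deviate strongly from an account’s history using a simple z-score. This is a deliberately basic statistical stand-in, not how production fraud-detection models work; the 3-standard-deviation threshold and the sample amounts are assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(
    history: list[float], new_values: list[float], threshold: float = 3.0
) -> list[float]:
    """Return the new values whose z-score against the historical baseline exceeds `threshold`."""
    if len(history) < 2:
        raise ValueError("need at least two historical points to estimate spread")
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation of the history
    if sigma == 0:
        # No variation in the history: anything that differs from the mean is unusual.
        return [v for v in new_values if v != mu]
    return [v for v in new_values if abs(v - mu) / sigma > threshold]


# Illustrative transaction amounts for one account (not real data).
history = [42.0, 38.5, 45.0, 40.0, 39.5, 44.0, 41.0]
incoming = [43.0, 250.0, 37.0]
print(flag_anomalies(history, incoming))
```

Real security or fraud agents typically combine many such signals (amount, location, timing, device) and learn thresholds from labeled data instead of hard-coding them.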

**Key Advantages:** The adoption of AI agents brings measurable benefits:

- Improved customer satisfaction through faster, more accurate responses and personalized experiences.

- Enhanced operational efficiency by automating time-consuming tasks and optimizing workflows.

- Reduced operational costs as AI agents handle high-volume, repetitive work that would otherwise require large human teams.

In summary, agent solutions—ranging from customer-facing chatbots to back-end security monitors—are revolutionizing enterprise operations. By deploying AI agents and leveraging multi-agent systems, organizations can automate tasks, enhance customer experience, and achieve new levels of efficiency and innovation.

Managing AI Workloads: Orchestrating and Scaling AI Services in Full-Stack Teams

Effectively managing AI workloads is essential for full-stack teams aiming to harness the full potential of AI agents. As organizations increasingly rely on AI technologies like machine learning and natural language processing, orchestrating and scaling AI services becomes a critical part of delivering robust, efficient, and scalable solutions. The stakes can be high: in healthcare, for example, remote-monitoring AI agents track patients’ vitals, such as heart rate, glucose levels, and oxygen saturation, in real time through connected devices, which shows how directly AI workloads can affect critical operations.

**What Are AI Workloads?** AI workloads encompass the data processing, computational resources, and operational requirements needed to run AI models and agents. These workloads can range from real-time data analysis and model inference to large-scale training and decision making. Managing these workloads efficiently ensures that AI agents can execute complex tasks (such as data analysis, customer support, and fraud detection) without bottlenecks or downtime.

**Orchestration and Scaling in Full-Stack Teams:** Full-stack teams, which bring together data scientists, engineers, and domain experts, must collaborate closely to orchestrate and scale AI services. This involves:

- Data Management: Collecting, processing, and storing vast amounts of relevant data points, ensuring that AI agents have access to high-quality, up-to-date information for analysis and decision making.

- Model Management: Developing, deploying, and monitoring AI models, including large language models and specialized machine learning algorithms. This ensures that agent solutions remain accurate, reliable, and aligned with business goals.

- Workflow Management: Orchestrating the flow of data and tasks between different AI services and agents, scaling resources as needed to handle fluctuating workloads and maintain optimal performance.
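One common way to scale resources with fluctuating load is proportional autoscaling. The Kubernetes Horizontal Pod Autoscaler, for example, computes desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The sketch below applies that rule to a hypothetical AI inference service, using queued requests per replica as the metric; the metric choice, the min/max bounds, and the numbers are assumptions, and the sketch omits the HPA’s tolerance and stabilization behavior.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 20,
) -> int:
    """Proportional scaling rule (same shape as the Kubernetes HPA formula),
    clamped to [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Example: 4 inference workers, each with ~25 queued requests, target of 10 per worker.
print(desired_replicas(current_replicas=4, current_metric=25, target_metric=10))
```

The clamp keeps a traffic spike from exhausting GPU quota and keeps at least one replica warm so latency-sensitive agents never cold-start from zero.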

**The Role of AI Services and Platforms:** Cloud-based AI services, such as those offered by Google Cloud, provide the infrastructure needed to manage AI workloads at scale. These platforms offer tools for data storage, model training, and deployment, enabling teams to focus on building intelligent systems rather than managing hardware. By leveraging these AI services, organizations can quickly scale up or down based on demand, ensuring that AI agents remain responsive and effective.

**Collaboration Between Human and AI Agents:** Managing AI workloads is not just about technology; it’s about people, too. Human agents and AI agents must work together to execute complex tasks, with humans providing oversight, strategic direction, and domain expertise, while AI agents handle data-driven, repetitive, or analytically intensive work. This synergy allows teams to deliver better results, faster.
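A simple way to implement this division of labor is confidence-based routing: the AI agent handles requests it is confident about and escalates the rest to a human queue. The `AgentAnswer` type, the 0.8 threshold, and the routing labels below are hypothetical; how you obtain a confidence score depends on your model or vendor.

```python
from dataclasses import dataclass


@dataclass
class AgentAnswer:
    """Hypothetical output of an AI agent: a draft reply plus a confidence score in [0, 1]."""
    ticket_id: str
    draft_reply: str
    confidence: float


def route(answer: AgentAnswer, threshold: float = 0.8) -> str:
    """Send high-confidence answers automatically; escalate everything else to a human."""
    if not 0.0 <= answer.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return "auto_reply" if answer.confidence >= threshold else "human_review"


answers = [
    AgentAnswer("T-1", "Reset your password from the login page.", 0.93),
    AgentAnswer("T-2", "This may be a billing system bug.", 0.41),
]
for a in answers:
    print(a.ticket_id, route(a))
```

Logging which tickets get escalated, and how humans resolve them, gives you the feedback data needed to tune the threshold over time.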

**Market Growth and Business Impact:** The importance of managing AI workloads is underscored by the rapid growth of the AI market, which is projected to see a compound annual growth rate (CAGR) of 33% from 2023 to 2028. This surge is driven by the widespread adoption of AI technologies across industries, as organizations seek to improve customer satisfaction, increase operational efficiency, and reduce operational costs.
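To put the 33% figure in perspective: assuming the projected rate holds for all five years from 2023 to 2028, compounding implies the market would reach roughly four times its 2023 size, where $V_t$ denotes market size in year $t$:

$$
\frac{V_{2028}}{V_{2023}} = (1 + 0.33)^{2028 - 2023} = 1.33^{5} \approx 4.16
$$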

**Proven Results:** Research reports highlight the tangible benefits of effective AI workload management:

- Customer satisfaction can improve by up to 25% as AI agents deliver faster, more accurate service.

- Operational efficiency can increase by 30% through intelligent automation and optimized workflows.

- Operational costs can be reduced by 20% as AI agents take on high-volume, time-consuming tasks.

**Key Takeaways:** By orchestrating and scaling AI services effectively, full-stack teams can ensure that AI agents deliver maximum value: executing complex tasks, analyzing data, and supporting decision making with minimal human intervention. The result is a more agile, efficient, and customer-centric organization, ready to thrive in the era of intelligent automation.

FAQs

Q1: What are AI agents in software development teams?